Set up an AWS VM build infrastructure

This build infrastructure option is only available with Harness CI Team and Enterprise plans.

Currently, this feature is behind the Feature Flag CI_VM_INFRASTRUCTURE. Contact Harness Support to enable the feature.

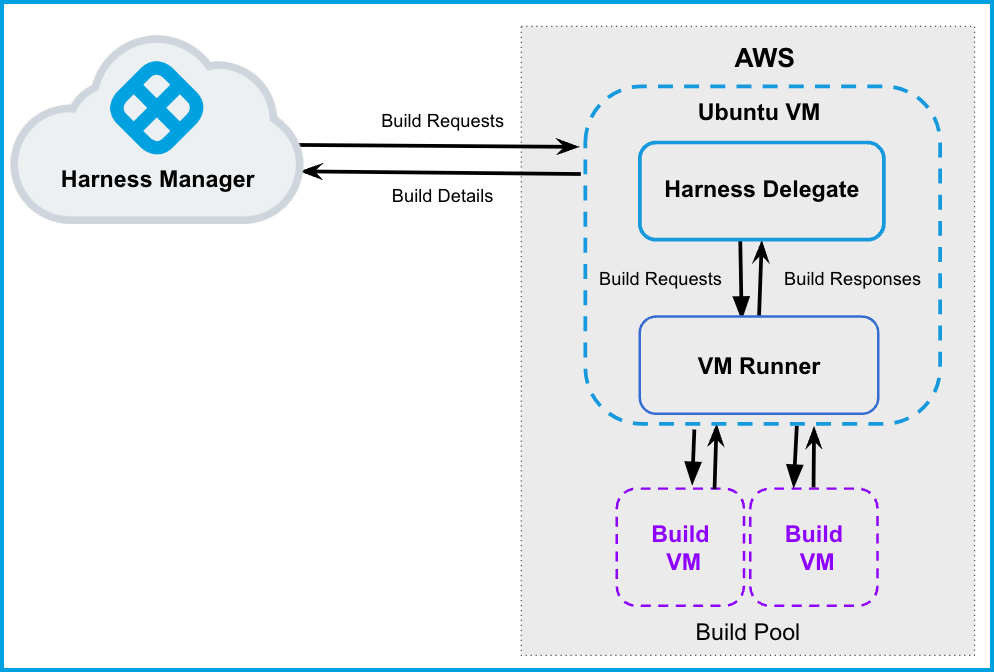

This topic describes how to use AWS VMs as Harness CI build infrastructure. To do this, you will create an Ubuntu VM and install a Harness Delegate and Drone VM Runner on it. The runner creates VMs dynamically in response to CI build requests. You can also configure the runner to hibernate AWS Linux and Windows VMs when they aren't needed.

This is one of several CI build infrastructure options. For example, you can also set up a Kubernetes cluster build infrastructure.

The following diagram illustrates a CI build farm using AWS VMs. The Harness Delegate communicates directly with your Harness instance. The VM runner maintains a pool of VMs for running builds. When the delegate receives a build request, it forwards the request to the runner, which runs the build on an available VM.

This is an advanced configuration. Before beginning, you should be familiar with:

- Harness key concepts

- CI pipeline creation

- Delegates

- CI Build stage settings

- Running pipelines on other build infrastructures

- Drone VM Runners and pools

Prepare AWS

These are the requirements for AWS EC2 configuration.

Machine specs

- The delegate VM must use an Ubuntu AMI that is t2.large or greater.

- Build VMs (in your VM pool) can be Ubuntu, AWS Linux, or Windows Server 2019 (or higher).

- All machine images must have Docker installed.

Authentication

Decide how you want the runner to authenticate with AWS.

- Access Key and Secret

- IAM Roles

You can use an access key and access secret (AWS secret) for the runner.

For Windows instances, you must add the AdministratorAccess policy to the IAM role associated with the access key and access secret.

You can use IAM profiles instead of access and secret keys.

The delegate VM must use an IAM role that has CRUD permissions on EC2. This role provides the runner with temporary security credentials to create VMs and manage the build pool. For details, go to the Amazon documentation on AmazonEC2FullAccess Managed policy.

To use IAM roles with Windows VMs, go to the AWS documentation for additional configuration for Windows IAM roles for tasks. This additional configuration is required because containers running on Windows can't directly access the IAM profile on the host.

VPC, ports, and security groups

- Set up VPC firewall rules for the build instances on EC2.

- Allow ingress access to ports 22 and 9079. Port 9079 is required for security groups within the VPC.

- You must also open port 3389 if you want to run Windows builds and be able to RDP into your build VMs.

- Create a Security Group. You need the Security Group ID to configure the runner. For information on creating Security Groups, go to the AWS documentation on authorizing inbound traffic for your Linux instances.
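As a rough sketch of the port rules above, the following shell function prints the AWS CLI calls that would open ports 22 and 9079 (plus 3389 for Windows pools) on a security group. It only prints the commands so that you can review them before running anything. The function name, the security group ID, and the CIDR range are placeholders chosen for illustration; they are not values from Harness or AWS.

```shell
# Print (not run) one `aws ec2 authorize-security-group-ingress` call per port.
# Arguments: security group ID, source CIDR, "true" to include RDP (3389).
# The ID and CIDR used below are placeholders; substitute your own values.
print_ingress_commands() {
  local sg_id="$1"
  local cidr="$2"
  local windows="${3:-false}"
  local ports="22 9079"
  local port
  if [ "$windows" = "true" ]; then
    ports="$ports 3389"   # RDP, needed only for Windows build VMs
  fi
  for port in $ports; do
    echo "aws ec2 authorize-security-group-ingress --group-id $sg_id --protocol tcp --port $port --cidr $cidr"
  done
}

print_ingress_commands "sg-XXXXXXXXXXX" "10.0.0.0/16" true
```

Restricting the CIDR to your VPC range, rather than opening the ports to all addresses, is usually the safer choice, but the right range depends on your network layout.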

Set up the delegate VM

- Log into the EC2 Console and launch the VM instance that will host the Harness Delegate.

- Install Docker on the instance.

- Install Docker Compose on the instance. You must have Docker Compose version 3.7 or higher installed.

- If you are using an IAM role, attach the role to the VM. For instructions, go to the AWS documentation on attaching an IAM role to an instance.
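Before moving on, it can help to confirm that the tools from the steps above are actually on the delegate VM. The following is a minimal sketch, not part of the Harness setup itself; the helper name require_tool is made up for this example.

```shell
# Report whether a command is available on PATH.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
  fi
}

require_tool docker
# Compose can be installed as the `docker compose` plugin or as a standalone
# `docker-compose` binary; this line only checks for the standalone binary.
require_tool docker-compose
```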

Configure the Drone pool on the AWS VM

The pool.yml file defines the VM spec and pool size for the VM instances used to run the pipeline. A pool is a group of instantiated VMs that are immediately available to run CI pipelines. You can configure multiple pools in pool.yml, such as a Windows VM pool and a Linux VM pool. To avoid unnecessary costs, you can configure pool.yml to hibernate VMs when not in use.

- Create a /runner folder on your delegate VM and cd into it:

  mkdir /runner
  cd /runner

- In the /runner folder, create a pool.yml file.

- Modify pool.yml as described in the following example. For information about specific settings, go to Pool settings reference.

Example pool.yml

The following pool.yml example defines an Ubuntu pool and a Windows pool.

version: "1"
instances:
  - name: ubuntu-ci-pool
    default: true
    type: amazon
    pool: 1
    limit: 4
    platform:
      os: linux
      arch: amd64
    spec:
      account:
        region: us-east-2 ## To minimize latency, use the same region as the delegate VM.
        availability_zone: us-east-2c ## To minimize latency, use the same availability zone as the delegate VM.
        access_key_id: XXXXXXXXXXXXXXXXX
        access_key_secret: XXXXXXXXXXXXXXXXXXX
        key_pair_name: XXXXX
      ami: ami-051197ce9cbb023ea
      size: t2.nano
      iam_profile_arn: arn:aws:iam::XXXX:instance-profile/XXXXX
      network:
        security_groups:
          - sg-XXXXXXXXXXX
  - name: windows-ci-pool
    default: true
    type: amazon
    pool: 1
    limit: 4
    platform:
      os: windows
    spec:
      account:
        region: us-east-2 ## To minimize latency, use the same region as the delegate VM.
        availability_zone: us-east-2c ## To minimize latency, use the same availability zone as the delegate VM.
        access_key_id: XXXXXXXXXXXXXXXXXXXXXX
        access_key_secret: XXXXXXXXXXXXXXXXXXXXXX
        key_pair_name: XXXXX
      ami: ami-088d5094c0da312c0
      size: t3.large
      hibernate: true
      network:
        security_groups:
          - sg-XXXXXXXXXXXXXX

Pool settings reference

You can configure the following settings in your pool.yml file. You can also learn more in the Drone documentation for the Pool File and Amazon drivers.

| Setting | Type | Example | Description |
|---|---|---|---|
| name | String | name: windows_pool | Unique identifier of the pool. You will need to specify this pool name in the Harness Manager when you set up the CI stage build infrastructure. |
| pool | Integer | pool: 1 | Warm pool size. Denotes the number of VMs kept in a ready state for the runner to use. |
| limit | Integer | limit: 3 | Maximum number of VMs the runner can create at any time. pool indicates the number of warm VMs, and the runner can create more VMs on demand up to the limit. For example, assume pool: 3 and limit: 10. If the runner gets a request for 5 VMs, it immediately provisions the 3 warm VMs (from pool) and provisions 2 more, which are not warm and take time to initialize. |
| platform | Key-value pairs, strings | Go to platform example. | Specify VM platform operating system (os: linux or os: windows). arch and variant are optional. os_name: amazon-linux is required for AL2 AMIs. The default configuration is os: linux and arch: amd64. |
| spec | Key-value pairs, various | Go to Example pool.yml and the examples in the following rows. | Configure settings for the build VMs and AWS instance. Contains a series of individual and mapped settings, including account, tags, ami, size, hibernate, iam_profile_arn, network, and disk. Details about these settings are provided below. |
| account | Key-value pairs, strings | Go to account example. | AWS account configuration, including region and access key authentication. |
| tags | Key-value pairs, strings | Go to tags example. | Optional tags to apply to the instance. |
| ami | String | ami: ami-092f63f22143765a3 | The AMI ID. Search for AMIs in your Region for supported models (Ubuntu, AWS Linux, Windows 2019+). AMI IDs differ by Region. |
| size | String | size: t3.large | The EC2 instance type (size), such as t2.nano, t2.micro, m4.large, and so on. |
| hibernate | Boolean | hibernate: true | When set to true (which is the default), VMs hibernate after startup. When false, VMs are always in a running state. This option is supported for AWS Linux and Windows VMs. Hibernation for Ubuntu VMs is not currently supported. For more information, go to the AWS documentation on hibernating on-demand Linux instances. |
| iam_profile_arn | String | iam_profile_arn: arn:aws:iam::XXXX:instance-profile/XXX | If using IAM roles, this is the instance profile ARN of the IAM role to apply to the build instances. |
| network | Key-value pairs, various | Go to network example. | AWS network information, including security groups and user data. For more information on these attributes, go to the AWS documentation on creating security groups. |
| disk | Key-value pairs, various | disk: size: 16 type: io1 iops: iops | Optional AWS block information. |
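The pool and limit arithmetic described in the table can be worked through with a small shell function. This is only a simplified model for illustration: the function name plan_vms is invented here, and the model assumes that requests beyond limit are simply capped. It ignores how the real runner queues requests or replenishes the warm pool.

```shell
# Simplified model: how many requested VMs come from the warm pool and how
# many must be created on demand. Arguments: requested, pool, limit.
plan_vms() {
  local requested="$1"
  local pool="$2"
  local limit="$3"
  # Never hand out more VMs than the limit allows.
  local granted=$(( requested < limit ? requested : limit ))
  # Warm VMs are used first, up to the warm pool size.
  local warm=$(( granted < pool ? granted : pool ))
  # Whatever remains must be provisioned cold.
  local cold=$(( granted - warm ))
  echo "warm=$warm cold=$cold"
}

plan_vms 5 3 10   # prints: warm=3 cold=2
```

With pool: 3 and limit: 10, a request for 5 VMs uses the 3 warm VMs and 2 on-demand VMs, which matches the example in the limit row.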

platform example

instance:
  platform:
    os: linux
    arch: amd64
    version:
    os_name: amazon-linux

account example

account:
  region: us-east-2
  availability_zone: us-east-2c
  access_key_id: XXXXX
  access_key_secret: XXXXX
  key_pair_name: XXXXX

tags example

tags:
  owner: USER
  ttl: '-1'

network example

network:
  private_ip: true
  subnet_id: subnet-XXXXXXXXXX
  security_groups:
    - sg-XXXXXXXXXXXXXX
  user_data: |
    ...

Start the runner

SSH into the delegate VM and run the following command to start the runner:

$ docker run -v /runner:/runner -p 3000:3000 drone/drone-runner-aws:latest delegate --pool /runner/pool.yml

This command mounts the /runner folder into the container so that the runner can read pool.yml, which contains the settings the runner uses to authenticate with AWS. It also publishes port 3000 and passes the delegate command and the --pool argument to the runner.

What does the runner do

When a build starts, the delegate receives a request for VMs on which to run the build. The delegate forwards the request to the runner, which then allocates VMs from the warm pool (specified by pool in pool.yml) and, if necessary, spins up additional VMs (up to the limit specified in pool.yml).

The runner includes lite engine, and the lite engine process triggers VM startup through a cloud init script. This script downloads and installs Scoop package manager, Git, the Drone plugin, and lite engine on the build VMs. The plugin and lite engine are downloaded from GitHub releases. Scoop is downloaded from get.scoop.sh.

Firewall restrictions can prevent the script from downloading these dependencies. Make sure your images don't have firewall or anti-malware restrictions that are interfering with downloading the dependencies. For more information, go to Troubleshooting.

Install the delegate

Install a Harness Docker Delegate on your delegate VM.

Create a delegate token. The delegate uses this token to authenticate with the Harness Platform.

- In Harness, go to Account Settings, then Account Resources, and then select Delegates.

- Select Tokens in the header, and then select New Token.

- Enter a token name and select Apply to generate a token.

- Copy the token and store it somewhere you can retrieve it when installing the delegate.

Again, go to Account Settings, then Account Resources, and then Delegates.

Select New Delegate.

Select Docker and enter a name for the delegate.

Copy and run the install command generated in Harness. Make sure the DELEGATE_TOKEN matches the one you just created.

For more information about delegates and delegate installation, go to Delegate installation overview.

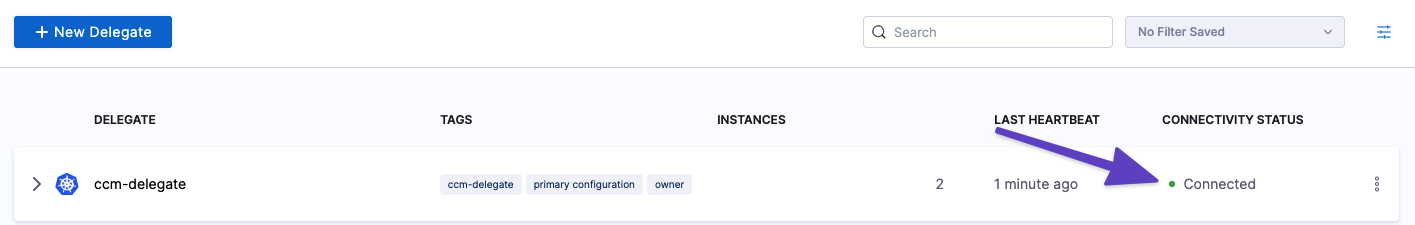

Verify connectivity

Verify that the delegate and runner containers are running correctly. You might need to wait a few minutes for both processes to start. You can run the following commands to check the process status:

$ docker ps

$ docker logs DELEGATE_CONTAINER_ID

$ docker logs RUNNER_CONTAINER_ID

In the Harness UI, verify that the delegate appears in the delegates list. It might take two or three minutes for the Delegates list to update. Make sure the Connectivity Status is Connected. If the Connectivity Status is Not Connected, make sure the Docker host can connect to https://app.harness.io.
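One way to test that outbound connection from the Docker host is a small curl wrapper such as the sketch below. The function name check_url is hypothetical, and the check assumes curl is installed. It reports success for any HTTP response, so it tests network reachability only, not whether the delegate token or account settings are valid.

```shell
# Report whether an HTTP(S) endpoint answers at all within 10 seconds.
# Any HTTP status counts as reachable; only connection-level failures
# (DNS, refused connection, timeout) count as unreachable.
check_url() {
  if curl --silent --output /dev/null --max-time 10 "$1" 2>/dev/null; then
    echo "reachable: $1"
  else
    echo "unreachable: $1"
  fi
}

# Example, run on the delegate VM:
#   check_url https://app.harness.io
```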

The delegate and runner are now installed, registered, and connected.

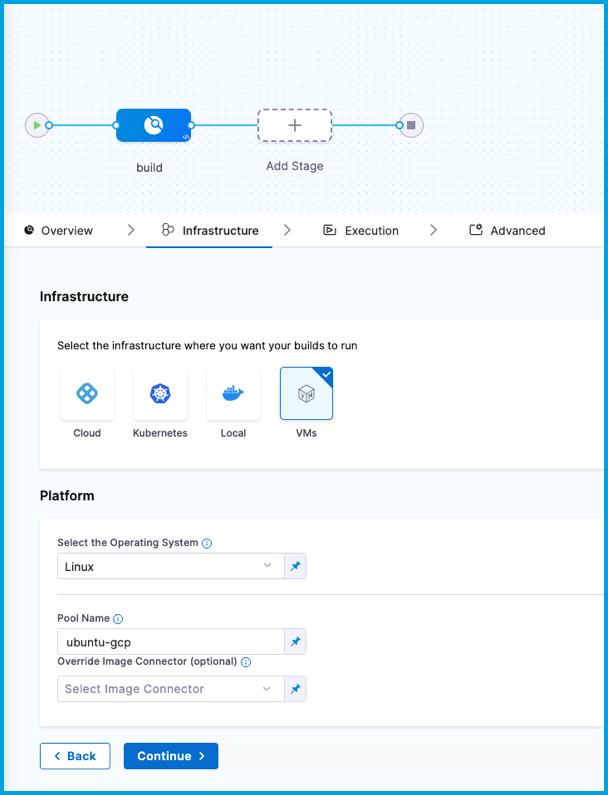

Specify build infrastructure

Configure your pipeline's Build (CI) stage to use your AWS VMs as build infrastructure.

- Visual

- YAML

- In Harness, go to the CI pipeline where you want to use the AWS VM build infrastructure.

- Select the Build stage, and then select the Infrastructure tab.

- Select VMs.

- Enter the Pool Name from your pool.yml.

- Save the pipeline.

- stage:
    name: build
    identifier: build
    description: ""
    type: CI
    spec:
      cloneCodebase: true
      infrastructure:
        type: VM
        spec:
          type: Pool
          spec:
            poolName: POOL_NAME_FROM_POOL_YML
            os: Linux
      execution:
        steps:
        ...

Troubleshooting

Build VM creation fails with no default VPC

When you run the pipeline, if VM creation in the runner fails with the error no default VPC, then you need to set subnet_id in pool.yml.

CI builds stuck at the initialize step on health check

If your CI build gets stuck at the initialize step on the health check for connectivity with lite engine, either lite engine is not running on your build VMs or there is a connectivity issue between the runner and lite engine.

- Verify that lite-engine is running on your build VMs.

  - SSH/RDP into a VM from your VM pool that is in a running state.

  - Check whether the lite-engine process is running on the VM.

  - Check the cloud init output logs to debug issues related to startup of the lite engine process. The lite engine process starts at VM startup through a cloud init script.

- If lite-engine is running, verify that the runner can communicate with lite-engine from the delegate VM.

  - Run nc -vz <build-vm-ip> 9079 from the runner.

  - If the status is not successful, make sure the security group settings in runner/pool.yml are correct, and make sure your security group setup in AWS allows the runner to communicate with the build VMs.

- Make sure there are no firewall or anti-malware restrictions on your AMI that are interfering with the cloud init script's ability to download necessary dependencies. For details about these dependencies, go to What does the runner do.
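If nc is not installed on the delegate VM, a similar port check can be sketched with bash's built-in /dev/tcp redirection. This is an illustrative alternative rather than a Harness-provided tool: the function name check_port is made up, and it assumes bash and the coreutils timeout command are available.

```shell
# Report whether a TCP port accepts connections, using bash's /dev/tcp.
# Gives up after 3 seconds so that filtered ports do not hang the check.
check_port() {
  local host="$1"
  local port="$2"
  if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "open: $host:$port"
  else
    echo "closed or unreachable: $host:$port"
  fi
}

# Example (replace the placeholder with the private IP of a build VM):
#   check_port <build-vm-ip> 9079
```

A "closed or unreachable" result does not distinguish between lite engine not listening and a security group blocking the port, so check both as described in the steps above.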

Delegate connected but builds fail

If the delegate is connected but your builds are failing, check the following:

- Make sure the AMIs specified in pool.yml are still available.

  - Amazon reprovisions their AMIs every two months.

  - For a Windows pool, search for an AMI called Microsoft Windows Server 2019 Base with Containers and update ami in pool.yml.

- Confirm your security group setup and security group settings in runner/pool.yml.

Using internal or custom AMIs

If you are using an internal or custom AMI, make sure it has Docker installed.

Additionally, make sure there are no firewall or anti-malware restrictions interfering with initialization, as described in CI builds stuck at the initialize step on health check.

Logs

- Linux

  - Lite engine logs: /var/log/lite-engine.log

  - Cloud init output logs: /var/log/cloud-init-output.log

- Windows

  - Lite engine logs: C:\Program Files\lite-engine\log.out

  - Cloud init output logs: C:\ProgramData\Amazon\EC2-Windows\Launch\Log\UserdataExecution.log
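On a Linux build VM, the two log files listed above can be inspected together with a short helper like the following sketch. The function name show_build_vm_logs and the default of 50 lines are arbitrary choices for this example. Depending on file permissions, you may need to run it with sudo.

```shell
# Print the last N lines (default 50) of each Linux build VM log, if readable.
show_build_vm_logs() {
  local lines="${1:-50}"
  local log
  for log in /var/log/lite-engine.log /var/log/cloud-init-output.log; do
    if [ -r "$log" ]; then
      echo "== $log =="
      tail -n "$lines" "$log"
    else
      echo "== $log (not found or not readable) =="
    fi
  done
}

show_build_vm_logs 20
```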