Set up a Kubernetes cluster build infrastructure

This topic describes how you can use a Kubernetes cluster build infrastructure for the Build stage in a Harness CI pipeline.

This topic assumes you have a basic understanding of Harness' key concepts.

Important notes

Review the following important information about using Kubernetes cluster build infrastructures for your Harness CI builds.

The Kubernetes cluster build infrastructure option is only available with Harness CI Team and Enterprise plans.

Privileged mode is required for Docker-in-Docker

If your build process needs to run Docker commands, Docker-in-Docker (DinD) with privileged mode is necessary when using a Kubernetes cluster build infrastructure.

If your Kubernetes cluster doesn't support privileged mode, you'll need to use another build infrastructure, such as Harness Cloud or a VM build infrastructure. Other infrastructure types allow you to run Docker commands directly on the host.

GKE Autopilot is not recommended

We don't recommend using Harness CI with GKE Autopilot due to Docker-in-Docker limitations and potential cloud cost increases.

Autopilot clusters do not allow privileged pods, which means you can't use Docker-in-Docker to run Docker commands, since these require privileged mode.

Additionally, GKE Autopilot sets resource limits equal to resource requests for each container. This can cause your builds to allocate more resources than they need, resulting in higher cloud costs with no added benefit.

GKE Autopilot cloud cost demonstration

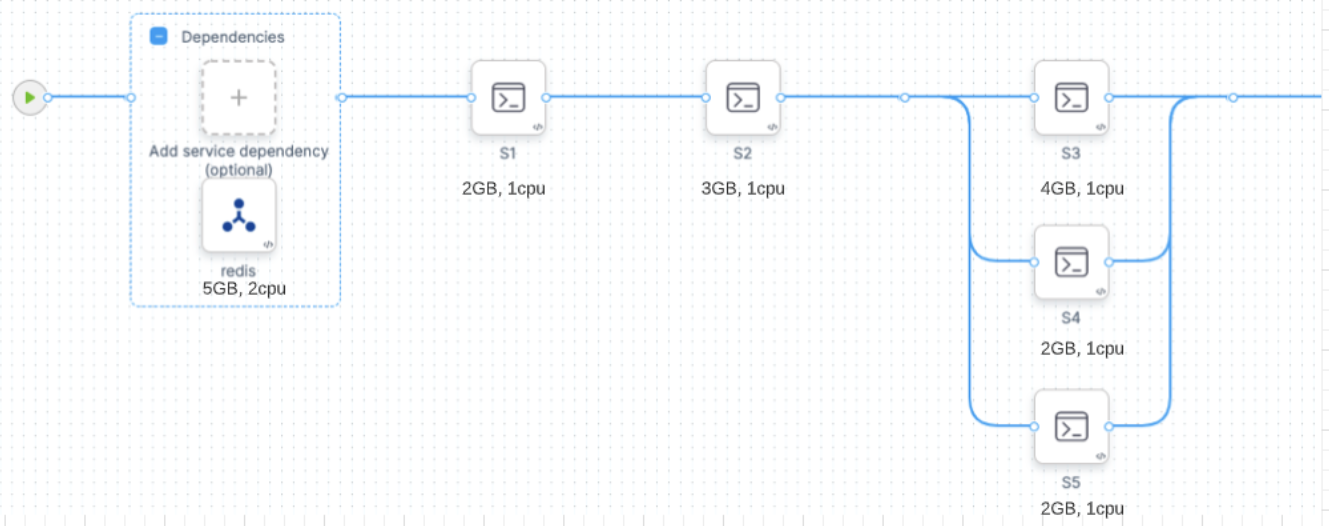

Consider the following CI stage:

Assume that you configure your stage resources as follows:

- Redis (service dependency in Background step): 5GB, 2 CPU

- s1 step: 2GB, 2 CPU

- s2 step: 3GB, 1 CPU

- s3 step: 4GB, 1 CPU

- s4 step: 2GB, 1 CPU

- s5 step: 2GB, 1 CPU

Kubernetes would allocate a pod based on the maximum requirements for the overall stage. In this example, the peak requirement is when the s3, s4, and s5 steps run in parallel. The pod also needs to run the Redis service at the same time. The total maximum requirements are the sum of Redis + s3 + s4 + s5:

- 5 + 4 + 2 + 2 = 13GB Memory

- 2 + 1 + 1 + 1 = 5 CPUs

GKE Autopilot calculates resource requirements differently. For containers, it sets resource limits equivalent to resource requests. For pods, it sums all step requirements in the stage, whether they're running in parallel or not. In this example, the total maximum requirements are the sum of Redis + s1 + s2 + s3 + s4 + s5:

- 5 + 2 + 2+ 4 + 4 + 4 = 17GB Memory

- 2 + 1 + 1+ 1 + 1 + 1 = 7 CPUs

Autopilot might be cheaper than standard Kubernetes if you only run builds occasionally. This can result in cost savings because some worker nodes are always running in a standard Kubernetes cluster. If you're running builds more often, Autopilot can increase costs unnecessarily.

Windows builds

Go to Run Windows builds in a Kubernetes cluster build infrastructure.

Self-signed certificates

Go to Configure a Kuberneted build farm to use self-signed certificates.

Process overview

After you set up the Kubernetes cluster that you want to use as your build infrastructure, you use a Harness Kubernetes Cluster connector and Harness Delegate to create a connection between Harness and your cluster.

Here's a short video that walks you through adding a Harness Kubernetes Cluster connector and Harness Kubernetes delegate. The delegate is added to the target cluster, then the Kubernetes Cluster connector uses the delegate to connect to the cluster.

Follow the steps below to set up your Kubernetes cluster build infrastructure. For a tutorial walkthrough, try the Build and test on a Kubernetes cluster build infrastructure tutorial.

Step 1: Create a Kubernetes cluster

Make sure your Kubernetes cluster meets the build infrastructure requirements in the CI cluster requirements and the Harness-specific permissions required for CI.

You need to install the Harness Kubernetes Delegate on the same cluster you use as your build infrastructure. Make sure that the cluster has enough memory and CPU for the Delegate you are installing. Harness Kubernetes Delegates can be in a different namespace than the one you provide while defining the build farm infrastructure for the CI pipeline.

For instructions on creating clusters, go to:

Step 2: Add the Kubernetes Cluster connector and install the Delegate

In your Harness Project, select Connectors under Project Setup.

Select New Connector, and then select Kubernetes cluster.

Enter a name for the connector and select Continue.

Select Use the credentials of a specific Harness Delegate, and then select Continue.

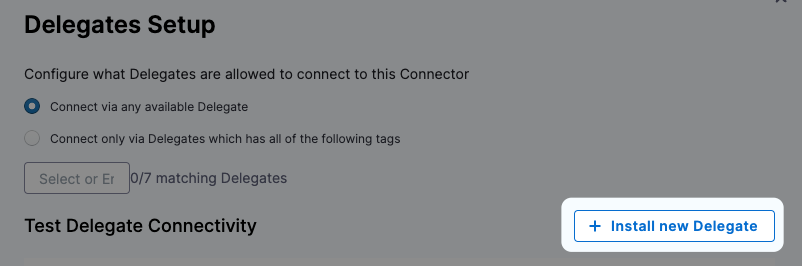

Select Install new Delegate.

Install the Delegate on a pod in your Kubernetes build infrastructure. You can use a Helm Chart, Terraform, or Kubernetes Manifest to install Kubernetes delegates. For details and instructions for each of these options, go to Delegate installation overview.

After installing the delegate, return to the Harness UI and select Verify to test the connection. It might take a few minutes to verify the Delegate. Once it is verified, exit delegate creation and return to connector setup.

In your Kubernetes Cluster connector's Delegates Setup, select Only use Delegates with all of the following tags.

Select your new Kubernetes delegate, and then select Save and Continue.

Wait while Harness tests the connection, and then select Finish.

Although you must select a specific delegate when you create the Kubernetes Cluster connector, you can choose to use a different delegate for executions and cleanups in individual pipelines or stages. To do this, use pipeline-level delegate selectors or stage-level delegate selectors.

Delegate selections take precedence in the following order:

- Stage

- Pipeline

- Connector

This means that if delegate selectors are present at the pipeline and stage levels, then these selections override the delegate selected in the Kubernetes cluster connector's configuration. If a stage has a stage-level delegate selector, then it uses that delegate. Stages that don't have stage-level delegate selectors use the pipeline-level selector, if present, or the connector's delegate.

For example, assume you have a pipeline with three stages called alpha, beta, and gamma. If you specify a stage-level delegate selector on alpha and you don't specify a pipeline-level delegate selector, then alpha uses the stage-level delegate, and the other stages (beta and gamma) use the Connector delegate.

Step 3: Define the Build Farm Infrastructure in Harness

In the Build stage's Infrastructure tab, select the Kubernetes cluster connector you created previously.

In Namespace, enter the Kubernetes namespace to use. You can also use a Runtime Input (<+input>) or expression for the namespace. For more information, go to Runtime Inputs.

You may need to configure the settings described below, as well as other advanced settings described in CI Build stage settings. Review the details of each setting to understand whether it is required for your configuration.

Service Account Name

Specify a Kubernetes service account that you want step containers to use when communicating with the Kubernetes API server. Leave this field blank if you want to use the namespace's default service account. You must set this field in the following cases:

- Your build infrastructure runs on EKS, you have an IAM role associated with the service account, and the stage has a step that uses a Harness AWS connector with IRSA. For more information, go to the AWS documentation on IAM Roles for Service Accounts.

- Your Build stage has steps that communicate with any external services using a service account other than the default. For more information, go to the Kubernetes documentation on Configure Service Accounts for Pods.

- Your Kubernetes cluster connector inherits authentication credentials from the Delegate.

Run as User or Run as Non-Root

Use the Run as Non-Root and Run as User settings to override the default Linux user ID for containers running in the build infrastructure. This is useful if your organization requires containers to run as a specific user with a specific set of permissions.

Using a non-root user can require other changes to your pipeline.

With a Kubernetes cluster build infrastructure, all Build and Push steps use kaniko. This tool requires root access to build the Docker image. It doesn't support non-root users.

If you enable Run as Non-Root, then you must:

- Run the Build and Push step as root by setting Run as User to

0on the Build and Push step. This will use the root user for that individual step only. - If your security policy doesn't allow running as root for any step, you must use the Buildah Drone plugin to build and push with non-root users.

- Run as Non-Root: Enable this option to run all steps as a non-root user. If enabled, you must specify a default user ID for all containers in the Run as User field.

- Run as User: Specify a user ID, such as

1000, to use for all containers in the pod. You can also set Run as User values for individual steps. If you set Run as User on a step, it overrides the build infrastructure Run as User setting.

For more information, go to Configure a security context for a Pod in the Kubernetes docs.

Init Timeout

If you use large images in your Build stage's steps, you might find that the initialization step times out and the build fails when the pipeline runs. In this case, you can increase the init timeout from the default of 8 minutes.

Annotations

You can add Kubernetes annotations to the pods in your infrastructure. An annotation can be small or large, structured or unstructured, and can include characters not permitted by labels. For more information, go to the Kubernetes documentation on Annotations.

Labels

You can add Kubernetes labels, as key-value pairs, to the pods in your infrastructure. Labels are useful for searching, organizing, and selecting objects with shared metadata. You can find pods associated with specific stages, organizations, projects, pipelines, builds, or any custom labels you want to query, for example:

kubectl get pods -l stageID=mycibuildstage

For more information, go to the Kubernetes documentation on Labels and Selectors.

Custom label values must the following regex in order to be generated:

^[a-z0-9A-Z][a-z0-9A-Z\\-_.]*[a-z0-9A-Z]$

Harness adds the following labels automatically:

stageID: Seepipeline.stages.stage.identifierin the Pipeline YAML.stageName: Seepipeline.stages.stage.namein the Pipeline YAML.orgID: Seepipeline.orgIdentifierin the Pipeline YAML.projectID: Seepipeline.projectIdentifierin the Pipeline YAML.pipelineID: Seepipeline.identifierin the Pipeline YAML.pipelineExecutionId: To find this, go to a CI Build in the Harness UI. ThepipelineExecutionIDis near the end of the URL path, betweenexecutionsand/pipeline, for example:

https://app.harness.io/ng/#/account/myaccount/ci/orgs/myusername/projects/myproject/pipelines/mypipeline/executions/__PIPELINE_EXECUTION-ID__/pipeline

YAML example

Here's a YAML example of a stage configured to use a Kubernetes cluster build infrastructure.

stages:

- stage:

name: Build Test and Push

identifier: Build_Test_and_Push

description: ""

type: CI

spec:

cloneCodebase: true

infrastructure:

type: KubernetesDirect

spec:

connectorRef: account.harnessk8s

namespace: harness-delegate

automountServiceAccountToken: true

nodeSelector: {}

os: Linux

Troubleshooting

For Kubernetes cluster build infrastructure troubleshooting guidance go to: