CD artifact sources

In DevOps, an artifact source is a location where the compiled, tested, and ready-to-deploy software artifacts are stored. These artifacts could be container images, compiled binary files, executables, or any other software components that are part of the application.

To add an artifact source, you add a Harness connector to the artifact platform (Docker Hub, GCR, Artifactory, etc.) and then add an artifact source to a Harness service that defines the artifact source name, path, tags, and so on.

This topic lists the artifact sources you can use in your Harness services.

Using artifacts in manifests

You have two options when referencing the artifacts you want to deploy:

- Add an artifact source to the Harness service and reference it using the Harness expression <+artifacts.primary.image> in the values YAML file.
- Hardcode the artifact into the manifests or values YAML file.

Use the artifact expression

Add the image location to Harness as an artifact in the Artifacts section of the service.

This allows you to reference the image in your values YAML files using the Harness expression <+artifacts.primary.image>.

```yaml
...
image: <+artifacts.primary.image>
...
```

You cannot use Harness variable expressions in your Kubernetes object manifest files. You can only use Harness variable expressions in values YAML files or Kustomize patch files.
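To make the substitution concrete, here is a minimal shell sketch that mimics what happens to a values file: the expression is replaced by a resolved image reference before the manifests are rendered. The `render_values` helper and the example image value are hypothetical; Harness performs the real resolution itself at runtime, and the exact string it produces depends on the artifact source.

```bash
#!/usr/bin/env bash
# Hypothetical illustration only: Harness resolves <+artifacts.primary.image>
# itself at runtime. This helper just mimics the effect on a values YAML file.

# Replace every occurrence of the primary artifact expression read from stdin
# with the image reference passed as $1.
render_values() {
  local image="$1"
  # '|' is the sed delimiter because image references contain '/'.
  sed "s|<+artifacts\.primary\.image>|${image}|g"
}

printf 'replicaCount: 2\nimage: <+artifacts.primary.image>\n' \
  | render_values "docker.io/library/nginx:stable-perl"
```

Lines that do not contain the expression pass through unchanged, which is why hardcoded values and templated values can live in the same file.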

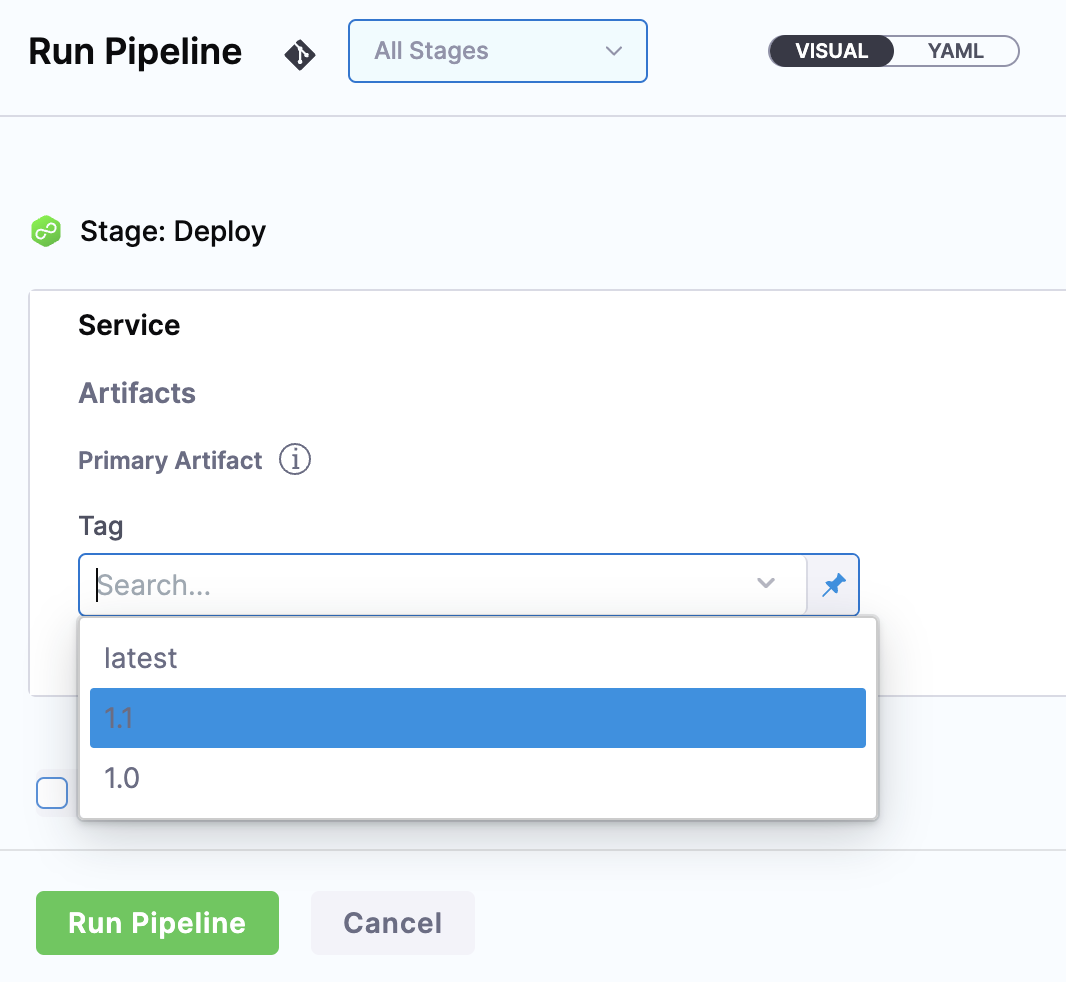

When you select the artifact repo for the artifact, like a Docker Hub repo, you specify the artifact and tag/version to use.

You can select a specific tag/version, use a runtime input so that you are prompted for the tag/version when you run the pipeline, or use a Harness variable expression to pass in the tag/version at execution.

Here's an example where a runtime input is used and you select which image version/tag to deploy.

With a Harness artifact, you can template your manifests, detaching them from a hardcoded location. This makes your manifests reusable and dynamic.

Hardcode the artifact

If a Docker image location is hardcoded in your Kubernetes manifest (for example, image: nginx:1.14.2), then you can simply add the manifest to Harness in Manifests and Kubernetes will pull the image during deployment.

When you hardcode the artifact in your manifests, any artifacts added to your Harness service are ignored.

Docker

Use artifacts in any Docker registry


To use a Docker artifact, you create or use a Harness connector to connect to your Docker repo and then use that connector in your Harness service and reference the artifact to use.

Docker connector YAML

```yaml
connector:
  name: Docker Hub with Pwd
  identifier: Docker_Hub_with_Pwd
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: DockerRegistry
  spec:
    dockerRegistryUrl: https://index.docker.io/v2/
    providerType: DockerHub
    auth:
      type: UsernamePassword
      spec:
        username: johndoe
        passwordRef: Docker_Hub_Pwd
    executeOnDelegate: false
```

Service using Docker artifact YAML

```yaml
service:
  name: Example K8s2
  identifier: Example_K8s2
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates/deployment.yaml
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: Docker_Hub_with_Pwd
                imagePath: library/nginx
                tag: stable-perl
              identifier: myimage
              type: DockerRegistry
  gitOpsEnabled: false
```

Create the Docker connector using the Create a Connector API.

Docker connector example

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=123456' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.123456.123456' \
  --data-raw 'connector:
  name: dockerhub
  identifier: dockerhub
  description: ""
  tags: {}
  orgIdentifier: default
  projectIdentifier: APISample
  type: DockerRegistry
  spec:
    dockerRegistryUrl: https://index.docker.io/v2/
    providerType: DockerHub
    auth:
      type: Anonymous'
```

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider Docker connector resource, go to harness_platform_connector_docker.

Docker connector example

```hcl
# Alternative examples: use one, or give each a unique identifier.

# credentials anonymous
resource "harness_platform_connector_docker" "anonymous" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  type               = "DockerHub"
  url                = "https://hub.docker.com"
  delegate_selectors = ["harness-delegate"]
}

# credentials username password
resource "harness_platform_connector_docker" "username_password" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  type               = "DockerHub"
  url                = "https://hub.docker.com"
  delegate_selectors = ["harness-delegate"]

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.

Service example

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: Example K8s2
      identifier: Example_K8s2
      serviceDefinition:
        type: Kubernetes
        spec:
          manifests:
            - manifest:
                identifier: myapp
                type: K8sManifest
                spec:
                  store:
                    type: Harness
                    spec:
                      files:
                        - /Templates/deployment.yaml
                  valuesPaths:
                    - /values.yaml
                  skipResourceVersioning: false
          artifacts:
            primary:
              primaryArtifactRef: <+input>
              sources:
                - spec:
                    connectorRef: Docker_Hub_with_Pwd
                    imagePath: library/nginx
                    tag: stable-perl
                  identifier: myimage
                  type: DockerRegistry
      gitOpsEnabled: false
  EOT
}
```

To add an artifact from a Docker registry, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Select Artifact Repository Type, select the registry where your Docker artifact is hosted. For this example, we'll select Docker Registry, and then click Continue.

Select or create a Docker Registry Connector.

Select Continue.

In Artifact Source Name, enter a name that identifies your artifact.

In Image Path, enter the name of the artifact you want to deploy, such as library/nginx or jsmith/privateimage. Official images in public repos often need the label library, for example, library/tomcat. Wildcards are not supported.

In Tag, enter or select the Docker image tag for the image.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

:::note

This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature.

:::

If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit. The Artifact is added to the Service Definition.
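The Image Path rules in the steps above can be checked before you enter a value in Harness. This sketch assumes Docker Hub naming, where a single-segment name (for example, tomcat) refers to an official image and therefore gets the library/ prefix, and it rejects wildcard characters. The `normalize_image_path` function is hypothetical and not part of Harness.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check for the Image Path field of a Docker Registry
# artifact source. Assumes Docker Hub naming, where official images live
# under the "library" namespace.
normalize_image_path() {
  local path="$1"
  case "$path" in
    *[*?]*)
      # Wildcards are not supported in Image Path.
      echo "error: wildcards are not supported: $path" >&2
      return 1
      ;;
    */*)
      # Already namespaced, e.g. library/nginx or jsmith/privateimage.
      printf '%s\n' "$path"
      ;;
    *)
      # Bare official image name, e.g. tomcat -> library/tomcat.
      printf 'library/%s\n' "$path"
      ;;
  esac
}

normalize_image_path tomcat               # library/tomcat
normalize_image_path jsmith/privateimage  # jsmith/privateimage
```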

Important notes

- When pulling Docker images from Docker repos, Harness is subject to the limits of the Docker repo, for example, Docker Hub limits.

- Harness fetches a maximum of 10,000 artifact image tags.
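If pulls from Docker Hub fail intermittently, it can help to check how much of your pull quota remains. Docker's documentation describes a ratelimit-remaining response header returned for a manifest request against the ratelimitpreview/test repository; the sketch below only parses that header. The `ratelimit_value` helper is hypothetical, the curl and jq commands are left as comments because they need network access, and Docker's rate-limit policy changes over time, so confirm the endpoint against Docker's current documentation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the numeric value of a rate-limit header
# (for example, "ratelimit-remaining: 76;w=21600") from raw HTTP
# response headers read on stdin.
ratelimit_value() {
  local name="$1"
  # Strip CRs from HTTP line endings, split on ':' and ';', and
  # print the field after the header name with spaces removed.
  tr -d '\r' | awk -F'[:;]' -v n="$name" '
    tolower($1) == n { gsub(/ /, "", $2); print $2 }'
}

# Example usage (requires network access, curl, and jq):
# TOKEN=$(curl -s "https://auth.docker.io/token?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull" | jq -r .token)
# curl -s --head -H "Authorization: Bearer $TOKEN" \
#   https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest \
#   | ratelimit_value ratelimit-remaining
```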

Google Container Registry (GCR)

Use GCR artifacts

You connect to GCR using a Harness GCP Connector. For details on all the GCR requirements for the GCP Connector, see Google Cloud Platform (GCP) Connector Settings Reference.


To use a GCR artifact, you create or use a Harness GCP Connector to connect to your GCR repo and then use that connector in your Harness service and reference the artifact to use.

GCP connector YAML

This example uses a Harness delegate installed in GCP for credentials.

```yaml
connector:
  name: GCR
  identifier: GCR
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using GCR artifact YAML

```yaml
service:
  name: Google Artifact
  identifier: Google_Artifact
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: manifests
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - account:/Templates
              valuesPaths:
                - account:/values.yaml
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: GCR
                imagePath: docs-play/todolist-sample
                tag: <+input>
                registryHostname: gcr.io
              identifier: myapp
              type: Gcr
  gitOpsEnabled: false
```

Create the GCR connector using the Create a Connector API.

GCR connector example

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: GCRexample
  identifier: GCRexample
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider GCP connector resource, go to harness_platform_connector_gcp.

GCP connector example

```hcl
# Alternative examples: use one, or give each a unique identifier.

# Credential manual
resource "harness_platform_connector_gcp" "manual" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_gcp" "inherit_from_delegate" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


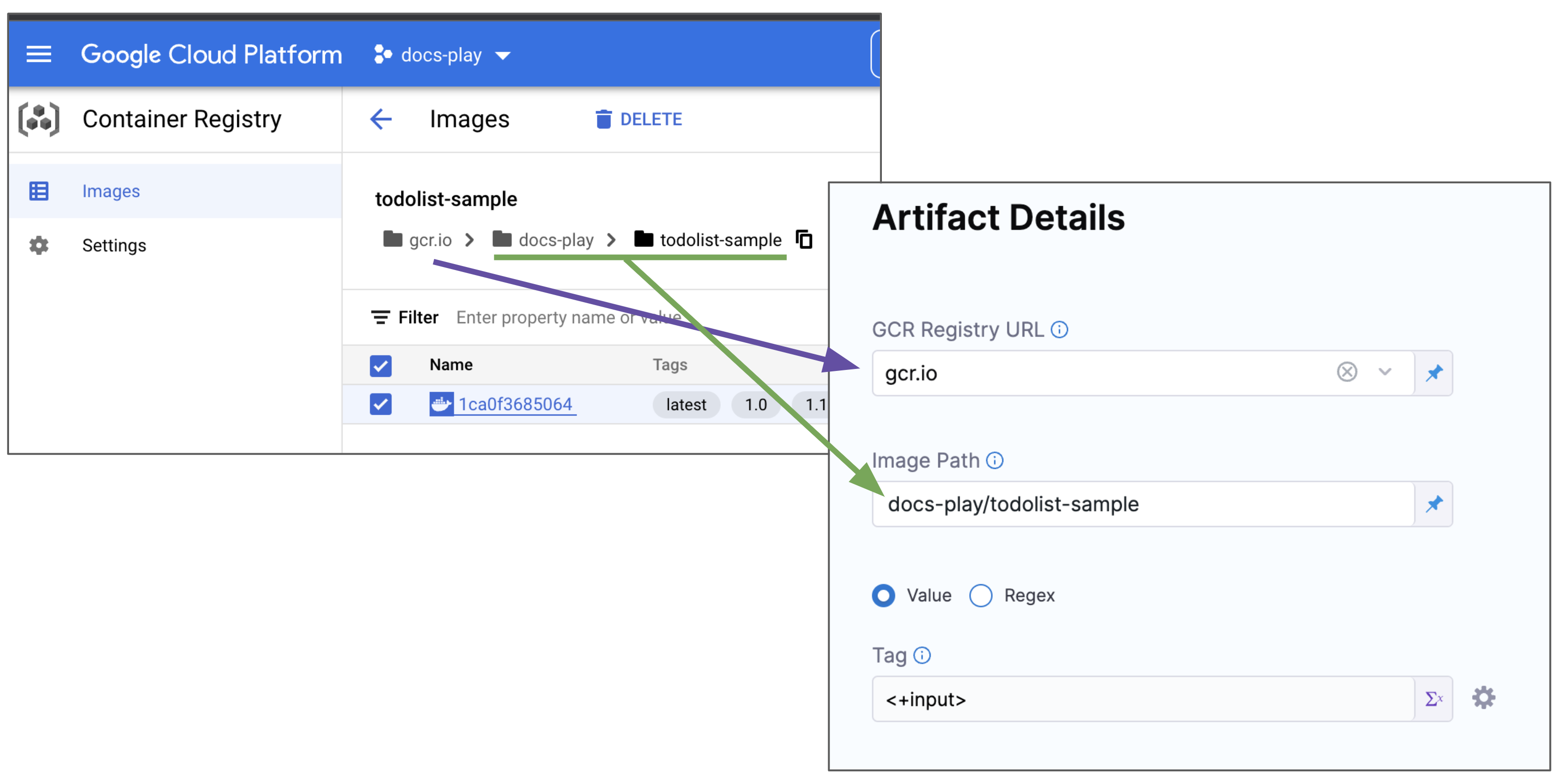

To add an artifact from GCR, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Select Artifact Repository Type, click GCR, and then click Continue.

In GCR Repository, select or create a Google Cloud Platform (GCP) Connector that connects to the GCP account where the GCR registry is located.

Click Continue.

In Artifact Source Name, enter a name for the artifact.

In GCR Registry URL, select the GCR registry host name, for example gcr.io.

In Image Path, enter the name of the artifact you want to deploy. Images in repos need to reference a path starting with the project ID that the artifact is in, for example: myproject-id/image-name.

In Tag, enter or select the Docker image tag for the image or select a runtime input or expression.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

:::note

This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature.

:::

If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit.

The Artifact is added to the Service Definition.
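The GCR Registry URL, Image Path, Tag, and optional Digest fields combine into a single image reference. A minimal sketch, assuming the standard Docker reference form host/path:tag with an optional @digest suffix; the `image_ref` function is hypothetical and only shows how the pieces fit together, for example when you want to try the same reference with docker pull.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build an image reference from GCR artifact fields.
#   $1 registry host (e.g. gcr.io)
#   $2 image path, starting with the GCP project ID
#   $3 tag
#   $4 optional digest (e.g. sha256:...)
image_ref() {
  local host="$1" path="$2" tag="$3" digest="${4:-}"
  local ref="${host}/${path}:${tag}"
  if [ -n "$digest" ]; then
    # Tag plus digest: Harness fails the pipeline if this pair is not in the registry.
    ref="${ref}@${digest}"
  fi
  printf '%s\n' "$ref"
}

image_ref gcr.io docs-play/todolist-sample v1
# gcr.io/docs-play/todolist-sample:v1
```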

Permissions

For Google Container Registry (GCR), the following roles are required:

- Storage Object Viewer (roles/storage.objectViewer)

- Storage Object Admin (roles/storage.objectAdmin)

For more information, go to the GCP documentation about Cloud IAM roles for Cloud Storage.

Ensure the Harness delegate you have installed can reach storage.cloud.google.com and your GCR registry host name, for example gcr.io.
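One way to check this network path is a quick probe run on the delegate host. The sketch below treats any HTTP status code as proof of connectivity (an unauthenticated registry request commonly returns 401, which still means the host answered) and curl's 000 as a failure. The `check_reachable` function and the example URLs are assumptions, not a Harness tool.

```bash
#!/usr/bin/env bash
# Hypothetical connectivity probe to run on the delegate host.
# Succeeds if the URL answers with any HTTP status code.
check_reachable() {
  local url="$1" code
  # curl prints 000 for %{http_code} when no HTTP response was received.
  code="$(curl -sS -o /dev/null -w '%{http_code}' --max-time 10 "$url" 2>/dev/null)" || true
  if [ -n "$code" ] && [ "$code" != "000" ]; then
    echo "reachable: $url (HTTP $code)"
  else
    echo "unreachable: $url" >&2
    return 1
  fi
}

# Example usage on the delegate host:
# check_reachable https://storage.cloud.google.com
# check_reachable https://gcr.io/v2/
```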

Use Docker Registry for GCR

If you do not want to use the GCP connector for GCR, you can use the platform-agnostic Docker Registry connector.

Use the following settings:

- Provider Type: select Other (Docker V2 compliant).
- URL: Enter the GCR URL for your GCP account.
  - Example: https://gcr.io/my-account.
- Authentication:
  - Username: Enter _token.
    - Using _token as the username for GCP authentication is typical of command-line utilities and API clients that authenticate with an access token instead of a traditional username and password.
  - Password: Use a Harness secret that contains the output of the gcloud auth print-access-token command.

Ensure that the GCP IAM user you use has the correct permissions for pulling from GCR.
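Before storing the token in a Harness secret, you can confirm that the _token username and an access token work against GCR by logging in with the Docker CLI from a machine where gcloud is authenticated. This is a sketch under that assumption: gcloud auth print-access-token and docker login --password-stdin are standard CLI commands, while the `gcr_token_login` wrapper is hypothetical. Access tokens printed this way are short-lived (typically one hour), so a token stored in a Harness secret stops working once it expires.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: log in to a GCR host with the "_token" username,
# piping a fresh OAuth access token to docker login via stdin.
gcr_token_login() {
  local host="${1:-https://gcr.io}"
  gcloud auth print-access-token \
    | docker login -u _token --password-stdin "$host"
}

# Example usage (requires gcloud and docker):
# gcr_token_login https://gcr.io
```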


Google Cloud Storage (GCS)

Use GCS artifacts

You connect to GCS using a Harness GCP Connector. For details on all the GCS requirements for the GCP Connector, see Google Cloud Platform (GCP) Connector Settings Reference.


To use a GCS artifact, you create or use a Harness GCP Connector to connect to your GCS bucket and then use that connector in your Harness service and reference the artifact to use.

GCP connector YAML

This example uses a Harness delegate installed in GCP for credentials.

```yaml
connector:
  name: GCS
  identifier: GCS
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using GCS artifact YAML

```yaml
service:
  name: GCS
  identifier: GCS
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: GCP_Connector
                project: myapp
                bucket: functions
                artifactPath: myfunction.zip
              identifier: myfunction
              type: GoogleCloudStorage
      manifests:
        - manifest:
            identifier: myfunction
            type: GoogleCloudFunctionDefinition
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /google-cloud-function/myfunction
    type: GoogleCloudFunctions
```

Create the GCP connector using the Create a Connector API.

GCP connector example

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: GCSexample
  identifier: GCSexample
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider GCP connector resource, go to harness_platform_connector_gcp.

GCP connector example

```hcl
# Alternative examples: use one, or give each a unique identifier.

# Credential manual
resource "harness_platform_connector_gcp" "manual" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_gcp" "inherit_from_delegate" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


To add an artifact from GCS, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Google Cloud Functions.

In Artifacts, select Add Artifact Source.

In Select Artifact Repository Type, click Google Cloud Storage, and then click Continue.

In Google Cloud Storage Repository, select or create a Google Cloud Platform (GCP) Connector that connects to the GCP account where the GCS bucket is located.

Click Continue.

In Project, select the GCP project where the bucket is located.

In Bucket, select the GCS bucket.

In Artifact Path, select the name of the artifact you want to deploy.

Click Submit.

The Artifact is added to the Service Definition.
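The Bucket and Artifact Path fields identify a single object in GCS. To confirm the object exists before selecting it in Harness, this sketch composes the equivalent gs:// URI; the `gcs_uri` helper is hypothetical, and the commented gcloud storage ls call assumes the Google Cloud CLI is installed and authenticated.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a gs:// URI from a bucket and an artifact path.
gcs_uri() {
  local bucket="$1" path="$2"
  # Strip one leading slash so "/myfunction.zip" and "myfunction.zip" agree.
  printf 'gs://%s/%s\n' "$bucket" "${path#/}"
}

gcs_uri functions myfunction.zip   # gs://functions/myfunction.zip

# Example usage (requires the Google Cloud CLI):
# gcloud storage ls "$(gcs_uri functions myfunction.zip)"
```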

Permissions

For Google Cloud Storage (GCS), the following roles are required:

- Storage Object Viewer (roles/storage.objectViewer)

- Storage Object Admin (roles/storage.objectAdmin)

For more information, go to the GCP documentation about Cloud IAM roles for Cloud Storage.
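If you grant these roles with the Google Cloud CLI, a sketch might look like the following. It binds both roles at the project level to the service account used by the connector or delegate; the `grant_storage_roles` helper, project ID, and service account are placeholders, and you may prefer narrower bindings (for example, on a single bucket) depending on your security policy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: grant the storage roles listed above, at the
# project level, to a service account.
grant_storage_roles() {
  local project="$1" sa="$2" role
  for role in roles/storage.objectViewer roles/storage.objectAdmin; do
    gcloud projects add-iam-policy-binding "$project" \
      --member="serviceAccount:${sa}" --role="$role" || return 1
  done
}

# Example usage (placeholders):
# grant_storage_roles my-project harness-sa@my-project.iam.gserviceaccount.com
```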

Google Artifact Registry

Use Google Artifact Registry artifacts

You connect to Google Artifact Registry using a Harness GCP Connector.

For details on all the Google Artifact Registry requirements for the GCP Connector, see Google Cloud Platform (GCP) Connector Settings Reference.


This example uses a Harness delegate installed in GCP for credentials.

Google Artifact Registry connector YAML

```yaml
connector:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using Google Artifact Registry artifact YAML

```yaml
service:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: myapp
              spec:
                connectorRef: Google_Artifact_Registry
                repositoryType: docker
                project: docs-play
                region: us-central1
                repositoryName: quickstart-docker-repo
                package: quickstart-docker-repo
                version: <+input>
              type: GoogleArtifactRegistry
    type: Kubernetes
```

Create the Google Artifact Registry connector using the Create a Connector API.

Google Artifact Registry connector example

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider GCP connector resource, go to harness_platform_connector_gcp.

GCP connector example

```hcl
# Alternative examples: use one, or give each a unique identifier.

# Credential manual
resource "harness_platform_connector_gcp" "manual" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_gcp" "inherit_from_delegate" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


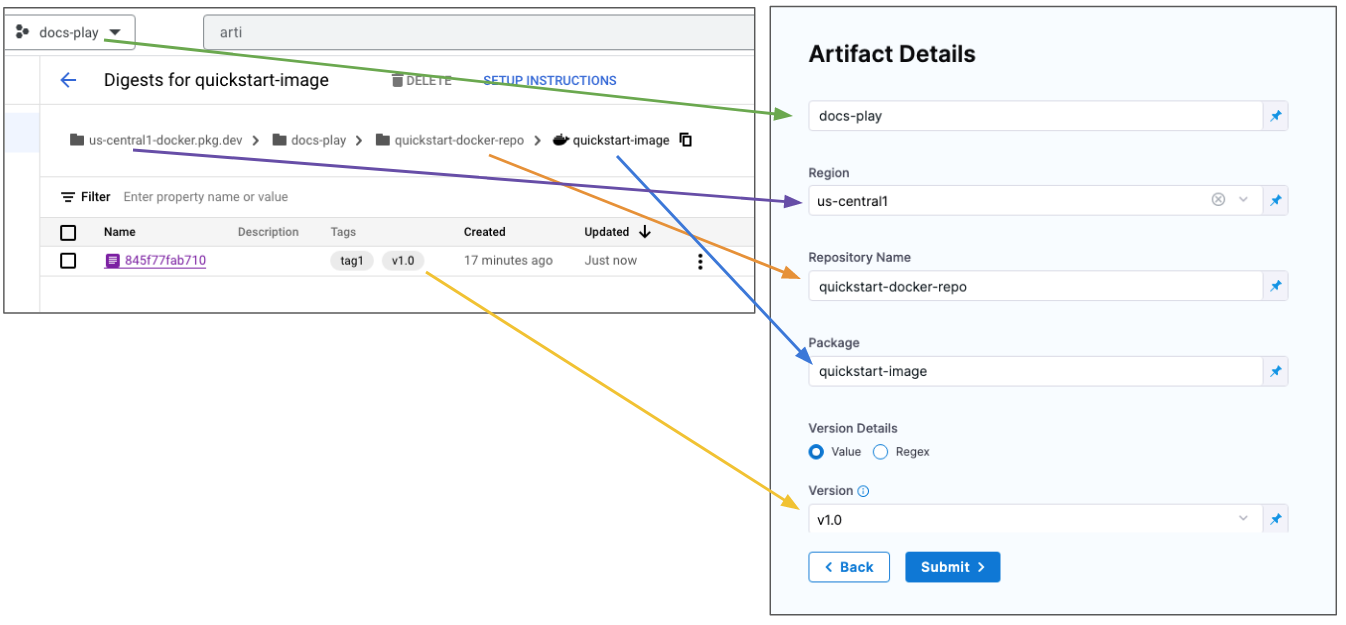

To add an artifact from Google Artifact Registry, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, select Google Artifact Registry, and then select Continue.

In GCP Connector, select or create a Google Cloud Platform (GCP) Connector that connects to the GCP account where the Google Artifact Registry is located.

Select Continue.

In Artifact Details, you are essentially building the image pull command. For example:

```bash
docker pull us-central1-docker.pkg.dev/docs-play/quickstart-docker-repo/quickstart-image:v1.0
```

In Artifact Source Name, enter a name for the artifact.

In Repository Type, select the format of the artifact.

In Project, enter the Id of the GCP project.

In Region, select the region where the repo is located.

In Repository Name, enter the name of the repo.

In Package, enter the artifact name.

In Version Details, select Value or Regex.

In Version, enter or select the Docker image tag.

:::note

If you used Fixed Value in Version and Harness cannot fetch the image tags, ensure that the GCP service account has the required permissions. This is either the service account whose key is used in the GCP connector credentials or the service account used to install the Harness delegate. See Permissions below.

:::

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

:::note

This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature.

:::

If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit. The Artifact is added to the Service Definition.
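The Artifact Details fields map onto the pull command shown in the steps above. A minimal sketch, assuming a Docker-format repository, that rebuilds the image reference from the Region, Project, Repository Name, Package, and Version values; the `gar_image_ref` function is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compose a Docker-format Google Artifact Registry
# image reference from the artifact source fields.
gar_image_ref() {
  local region="$1" project="$2" repo="$3" package="$4" version="$5"
  printf '%s-docker.pkg.dev/%s/%s/%s:%s\n' \
    "$region" "$project" "$repo" "$package" "$version"
}

gar_image_ref us-central1 docs-play quickstart-docker-repo quickstart-image v1.0
# us-central1-docker.pkg.dev/docs-play/quickstart-docker-repo/quickstart-image:v1.0
```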

Permissions

For Google Artifact Registry, the following roles are required:

- Artifact Registry Reader

- Artifact Registry Writer

For more information, go to the GCP documentation Configure roles and permissions.
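If you manage these grants with the Google Cloud CLI, a sketch might bind both roles on a single repository to the service account used by Harness. The `grant_gar_roles` helper and its arguments are placeholders; gcloud artifacts repositories add-iam-policy-binding is the standard command for repository-level bindings, but check its options against the CLI reference for your gcloud version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: grant the Artifact Registry roles listed above on
# one repository to a service account.
grant_gar_roles() {
  local repo="$1" location="$2" project="$3" sa="$4" role
  for role in roles/artifactregistry.reader roles/artifactregistry.writer; do
    gcloud artifacts repositories add-iam-policy-binding "$repo" \
      --location="$location" --project="$project" \
      --member="serviceAccount:${sa}" --role="$role" || return 1
  done
}

# Example usage (placeholders):
# grant_gar_roles quickstart-docker-repo us-central1 docs-play harness-sa@docs-play.iam.gserviceaccount.com
```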

Ensure the Harness delegate you have installed can reach your Google Artifact Registry region, for example us-central1.

Azure DevOps Artifacts

Use Azure Artifacts

You connect to your Azure DevOps artifacts using a Harness Azure Artifacts connector.


Azure Artifacts connector YAML

```yaml
connector:
  name: Azure Artifacts
  identifier: Azure_Artifacts
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: AzureArtifacts
  spec:
    azureArtifactsUrl: https://dev.azure.com/garvit-test
    auth:
      spec:
        type: PersonalAccessToken
        spec:
          tokenRef: azureartifactspat
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using Azure Artifacts artifact YAML

```yaml
service:
  name: Azure Artifacts
  identifier: Azure_Artifacts
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: mypackage
              spec:
                connectorRef: Azure_Artifacts
                scope: org
                feed: garvit-test
                packageType: maven
                package: com.mycompany.app:my-app
                version: 1.1-SNAPSHOT
              type: AzureArtifacts
    type: Ssh
```

Azure Artifact connector example

Create the Azure Artifact connector using the Create a Connector API.

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Azure Artifacts
  identifier: Azure_Artifacts
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: AzureArtifacts
  spec:
    azureArtifactsUrl: https://dev.azure.com/garvit-test
    auth:
      spec:
        type: PersonalAccessToken
        spec:
          tokenRef: azureartifactspat
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Create a service with an artifact source that uses the connector using the Create Services API.

Azure Artifact connector

- In your Harness project, in Connectors, select New Connector, and then select Azure Artifacts.

- Enter a name for the connector, and select Continue.

- In Azure Artifacts URL, enter the Azure DevOps organization URL, for example, https://dev.azure.com/my-org.

- In Personal Access Token, enter a PAT for the Azure DevOps organization, and select Continue.

- In Delegates Setup, select a delegate that has network connectivity to the Azure Cloud.

- Save the connector.

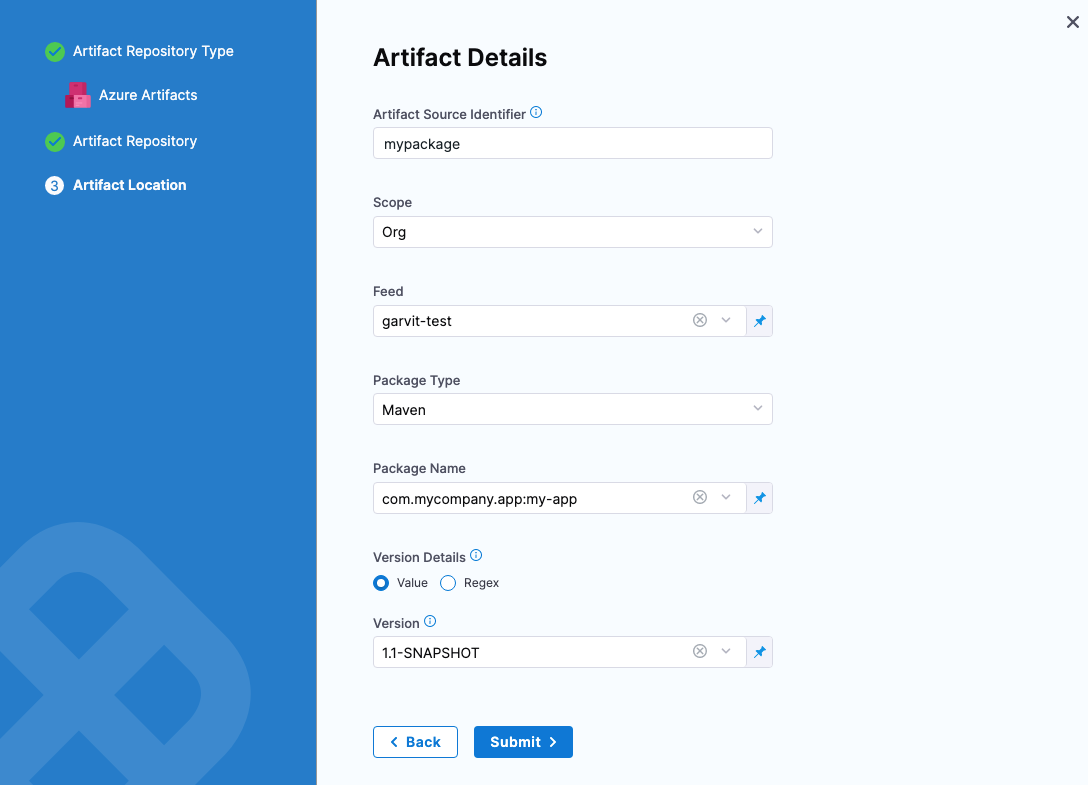

Add an Azure Artifact artifact

- In a Harness service, select Configuration.

- In Deployment Type, select one of the supported deployment types.

- In Artifacts, select Add Artifact Source.

- In Specify Artifact Repository Type, select Azure Artifacts, and select Continue.

- In Azure Artifacts Repository, select or create an Azure Artifacts connector that connects to your Azure DevOps organization, and then select Continue.

- In Artifact Details, enter the following:

- Artifact Source Identifier: Enter the name for the artifact in Harness.

- Scope: Select Org or Project.

- Feed: Select the artifact feed.

- Package Name: Select the name of the package on Azure Artifacts.

- Version: Select the artifact version to use or set the option as a runtime input or expression.

- Select Submit.

Deployment type support

Azure DevOps Artifacts can be used with the following Harness deployment types:

- SSH

- WinRM

- Azure Web Apps

- Tanzu

Package type support

Harness supports Maven and NuGet package types.

Harness also supports universal packages, which let developers store a wide range of package types beyond the conventional ones. Note the following:

- Currently, support is limited to traditional VM deployments using SSH or WinRM.
- Artifact source templates are not currently supported for universal packages.

Here's an example definition of a universal package in a Harness service definition:

```yaml
service:
  name: azure
  identifier: azure
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: azure
              spec:
                connectorRef: azure
                scope: org
                feed: universaltest68137
                packageType: upack
                package: my-first-package
                version: 0.0.1
              type: AzureArtifacts
    type: AzureWebApp
```

Azure DevOps URL

This is the URL in your browser when you are in the Azure DevOps organization containing the projects and feed(s) you want to use.

For example, for the URL https://dev.azure.com/garvit-test/sample-project/_packaging?_a=feed&feed=other-feed, you only need to enter https://dev.azure.com/garvit-test in the Azure Artifacts URL setting of the Harness connector.
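The reduction described above can be sketched as a small shell function that keeps only the organization segment of a dev.azure.com URL. The `azure_devops_org_url` name is hypothetical, and the sketch assumes the https://dev.azure.com/ URL form; it rejects anything else rather than guessing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reduce an Azure DevOps URL to the organization URL
# used in the Harness Azure Artifacts connector.
azure_devops_org_url() {
  local url="$1" rest org
  case "$url" in
    https://dev.azure.com/*) ;;
    *) echo "error: expected an https://dev.azure.com/ URL: $url" >&2; return 1 ;;
  esac
  rest="${url#https://dev.azure.com/}"
  # Keep everything up to the first '/', '?', or '#'.
  org="${rest%%[/?#]*}"
  printf 'https://dev.azure.com/%s\n' "$org"
}

azure_devops_org_url 'https://dev.azure.com/garvit-test/sample-project/_packaging?_a=feed&feed=other-feed'
# https://dev.azure.com/garvit-test
```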

Permissions

You use an Azure DevOps Personal Access Token (PAT) to authenticate with Azure.

Create a personal access token as described in Authenticate access with personal access tokens in the Azure documentation.

Next, copy the token and paste it in the Harness Encrypted Text secret you use for the PAT in the Harness Azure Artifacts connector.

The PAT must have the Read permission in Packaging.

Azure Container Registry (ACR)

Use ACR artifacts

You connect to ACR using a Harness Azure Connector. For details on all the Azure requirements for the Azure Connector, see Add a Microsoft Azure cloud connector.


This example uses manual credentials: an application ID, a tenant ID, and a client secret stored as a Harness secret.

Azure connector for ACR YAML

```yaml
connector:
  name: ACR-docs
  identifier: ACRdocs
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Azure
  spec:
    credential:
      type: ManualConfig
      spec:
        applicationId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        tenantId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        auth:
          type: Secret
          spec:
            secretRef: acrvalue
    azureEnvironmentType: AZURE
    executeOnDelegate: false
```

Service using ACR artifact YAML

```yaml
service:
  name: Azure with ACR
  identifier: Azure
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: ACRdocs
                tag: <+input>
                subscriptionId: <+input>
                registry: <+input>
                repository: <+input>
              identifier: myapp
              type: Acr
    type: Kubernetes
```

Create the ACR connector using the Create a Connector API.

ACR connector example

```bash
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: ACR-docs
  identifier: ACRdocs
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Azure
  spec:
    credential:
      type: ManualConfig
      spec:
        applicationId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        tenantId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        auth:
          type: Secret
          spec:
            secretRef: acrvalue
    azureEnvironmentType: AZURE
    executeOnDelegate: false'
```

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider ACR connector resource, go to harness_platform_connector_azure_cloud_provider.

ACR connector example

```hcl
resource "harness_platform_connector_azure_cloud_provider" "manual_config_secret" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "ManualConfig"
    azure_manual_details {
      application_id = "application_id"
      tenant_id      = "tenant_id"
      auth {
        type = "Secret"
        azure_client_secret_key {
          secret_ref = "account.${harness_platform_secret_text.test.id}"
        }
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "manual_config_certificate" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "ManualConfig"
    azure_manual_details {
      application_id = "application_id"
      tenant_id      = "tenant_id"
      auth {
        type = "Certificate"
        azure_client_key_cert {
          certificate_ref = "account.${harness_platform_secret_text.test.id}"
        }
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "inherit_from_delegate_user_assigned_managed_identity" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "InheritFromDelegate"
    azure_inherit_from_delegate_details {
      auth {
        azure_msi_auth_ua {
          client_id = "client_id"
        }
        type = "UserAssignedManagedIdentity"
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "inherit_from_delegate_system_assigned_managed_identity" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "InheritFromDelegate"
    azure_inherit_from_delegate_details {
      auth {
        type = "SystemAssignedManagedIdentity"
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}
```

For the Terraform Provider service resource, go to harness_platform_service.

You connect to ACR using a Harness Azure Connector. For details on all the Azure requirements for the Azure Connector, see Add a Microsoft Azure Cloud Connector.

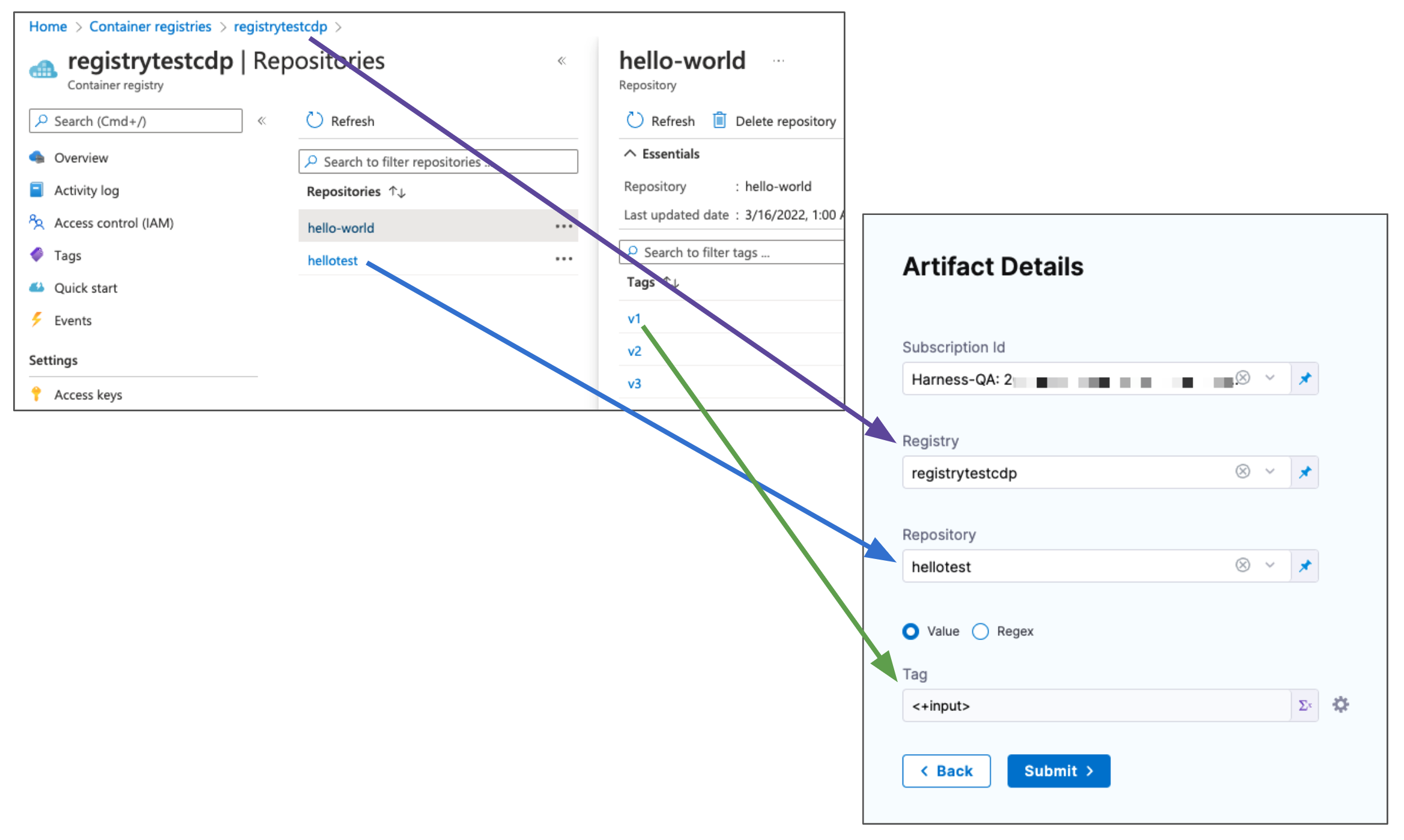

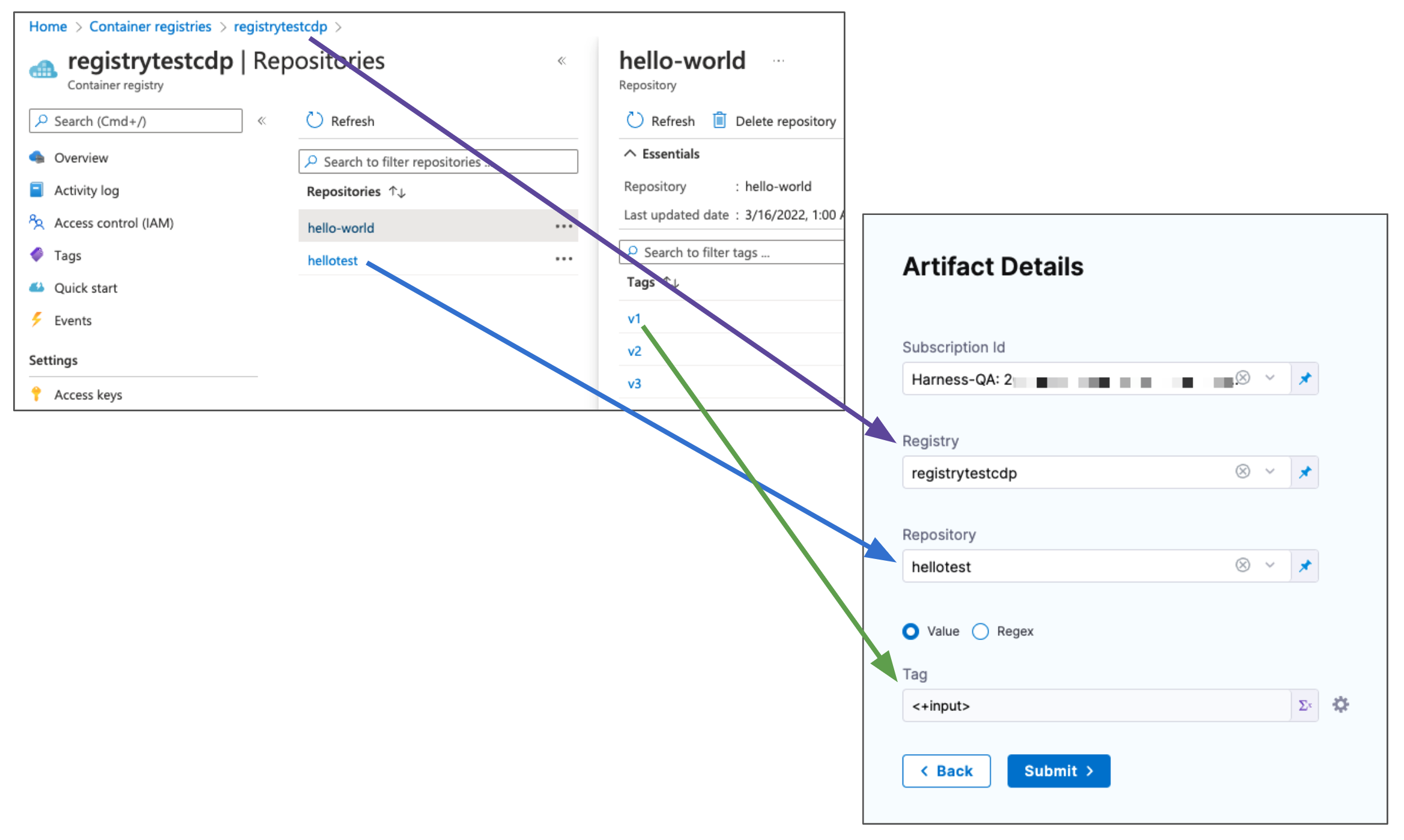

To add an artifact from ACR, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, click ACR, and then select Continue.

In ACR Repository, select or create an Azure Connector that connects to the Azure account where the ACR registry is located.

Select Continue.

In Artifact Details, in Subscription Id, select the Subscription Id where the artifact source is located.

In Registry, select the ACR registry to use.

In Repository, select the repo to use.

In Tag, enter or select the tag for the image.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

Click Submit. The artifact is added to the Service Definition.

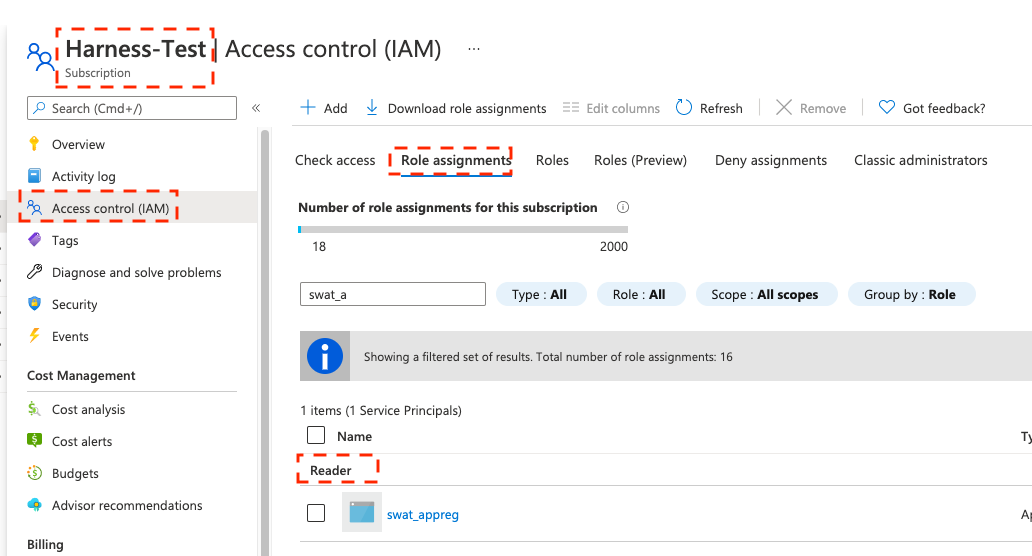

Permissions

The Harness Azure connectors that you'll use to connect Harness to ACR must have the Reader role, at minimum. You can also use a custom role that includes the permissions of the Reader role.

Reader role information

The Reader role must be assigned, at the Subscription or Resource Group level, to the application (client) ID that you use in the Azure connector's settings. The application must have permission to list all container registries.

Make sure you:

- Don't assign the Reader role in a different IAM section of Azure.

- Don't assign only the AcrPull role instead of Reader. The AcrPull role might appear to give access to a specific registry, but Harness needs to list all registries.

Custom role information

The following permissions (actions) are necessary for any Service Principal and/or Managed Identity user, regardless of whether you are using Kubernetes RBAC or Azure RBAC:

- Microsoft.ContainerRegistry/registries/read
- Microsoft.ContainerRegistry/registries/builds/read
- Microsoft.ContainerRegistry/registries/metadata/read
- Microsoft.ContainerRegistry/registries/pull/read
- Microsoft.ContainerService/managedClusters/read
- Microsoft.ContainerService/managedClusters/listClusterUserCredential/action
- Microsoft.Resources/subscriptions/resourceGroups/read

For Helm deployments, the version of Helm must be >= 3.2.0. The Harness HELM_VERSION_3_8_0 feature flag must be activated.

You can't use Pod Assigned Managed Identity and System Assigned Managed Identity for the same cluster.

The following JSON sample creates a custom role with the required permissions. To use this sample, replace xxxx with the role name, subscription Id, and resource group Id.

{
  "id": "/subscriptions/xxxx/providers/Microsoft.Authorization/roleDefinitions/xxxx",
  "properties": {
    "roleName": "xxxx",
    "description": "",
    "assignableScopes": [
      "/subscriptions/xxxx/resourceGroups/xxxx"
    ],
    "permissions": [
      {
        "actions": [],
        "notActions": [],
        "dataActions": [
          "Microsoft.ContainerService/managedClusters/configmaps/read",
          "Microsoft.ContainerService/managedClusters/configmaps/write",
          "Microsoft.ContainerService/managedClusters/configmaps/delete",
          "Microsoft.ContainerService/managedClusters/secrets/read",
          "Microsoft.ContainerService/managedClusters/secrets/write",
          "Microsoft.ContainerService/managedClusters/secrets/delete",
          "Microsoft.ContainerService/managedClusters/apps/deployments/read",
          "Microsoft.ContainerService/managedClusters/apps/deployments/write",
          "Microsoft.ContainerService/managedClusters/apps/deployments/delete",
          "Microsoft.ContainerService/managedClusters/events/read",
          "Microsoft.ContainerService/managedClusters/events/write",
          "Microsoft.ContainerService/managedClusters/events/delete",
          "Microsoft.ContainerService/managedClusters/namespaces/read",
          "Microsoft.ContainerService/managedClusters/nodes/read",
          "Microsoft.ContainerService/managedClusters/pods/read",
          "Microsoft.ContainerService/managedClusters/pods/write",
          "Microsoft.ContainerService/managedClusters/pods/delete",
          "Microsoft.ContainerService/managedClusters/services/read",
          "Microsoft.ContainerService/managedClusters/services/write",
          "Microsoft.ContainerService/managedClusters/services/delete",
          "Microsoft.ContainerService/managedClusters/apps/statefulsets/read",
          "Microsoft.ContainerService/managedClusters/apps/statefulsets/write",
          "Microsoft.ContainerService/managedClusters/apps/statefulsets/delete",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/read",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/write",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/delete"
        ],
        "notDataActions": []
      }
    ]
  }
}
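If you keep the custom role in code, you can generate the JSON sample above instead of editing the `xxxx` placeholders by hand. The following is a minimal Python sketch, not a Harness or Azure tool. The function `build_custom_role` and its parameter names are hypothetical, and the data actions are the ones listed in the sample.

```python
import json

# Objects from the sample role that need read, write, and delete data actions.
READ_WRITE_DELETE = [
    "configmaps", "secrets", "apps/deployments", "events",
    "pods", "services", "apps/statefulsets", "apps/replicasets",
]
# Objects from the sample role that need only the read data action.
READ_ONLY = ["namespaces", "nodes"]
PREFIX = "Microsoft.ContainerService/managedClusters"


def build_custom_role(role_name, role_definition_id, subscription_id, resource_group):
    """Fill in the xxxx placeholders of the sample custom role (hypothetical helper)."""
    data_actions = [
        f"{PREFIX}/{obj}/{verb}"
        for obj in READ_WRITE_DELETE
        for verb in ("read", "write", "delete")
    ]
    data_actions += [f"{PREFIX}/{obj}/read" for obj in READ_ONLY]
    return {
        "id": f"/subscriptions/{subscription_id}/providers/"
              f"Microsoft.Authorization/roleDefinitions/{role_definition_id}",
        "properties": {
            "roleName": role_name,
            "description": "",
            "assignableScopes": [
                f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            ],
            "permissions": [{
                "actions": [],
                "notActions": [],
                "dataActions": data_actions,
                "notDataActions": [],
            }],
        },
    }


if __name__ == "__main__":
    role = build_custom_role("harness-acr-aks", "00000000-0000-0000-0000-000000000000",
                             "my-subscription-id", "my-resource-group")
    print(json.dumps(role, indent=2))
```

The generated data actions match the sample's 26 entries, but they may appear in a different order. Azure does not depend on the order of actions in a role definition.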

Important notes

- Harness supports 500 images from an ACR repo. If you don't see some of your images, then you might have exceeded this limit. This is the result of an Azure API limitation.

- If you connect to an ACR repo via the platform-agnostic Docker Connector, the limit is 100.

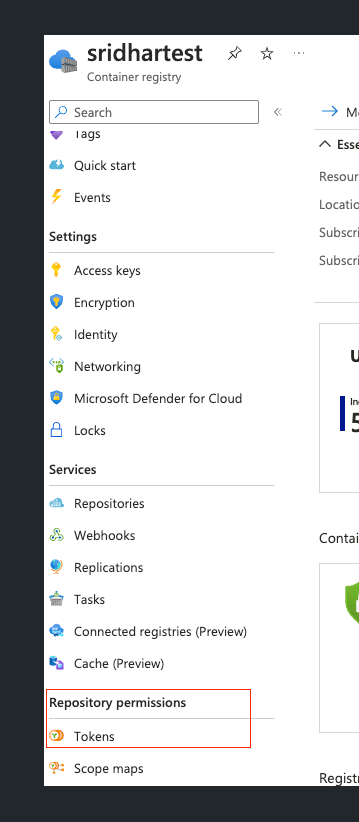

Use Docker Registry connector for ACR

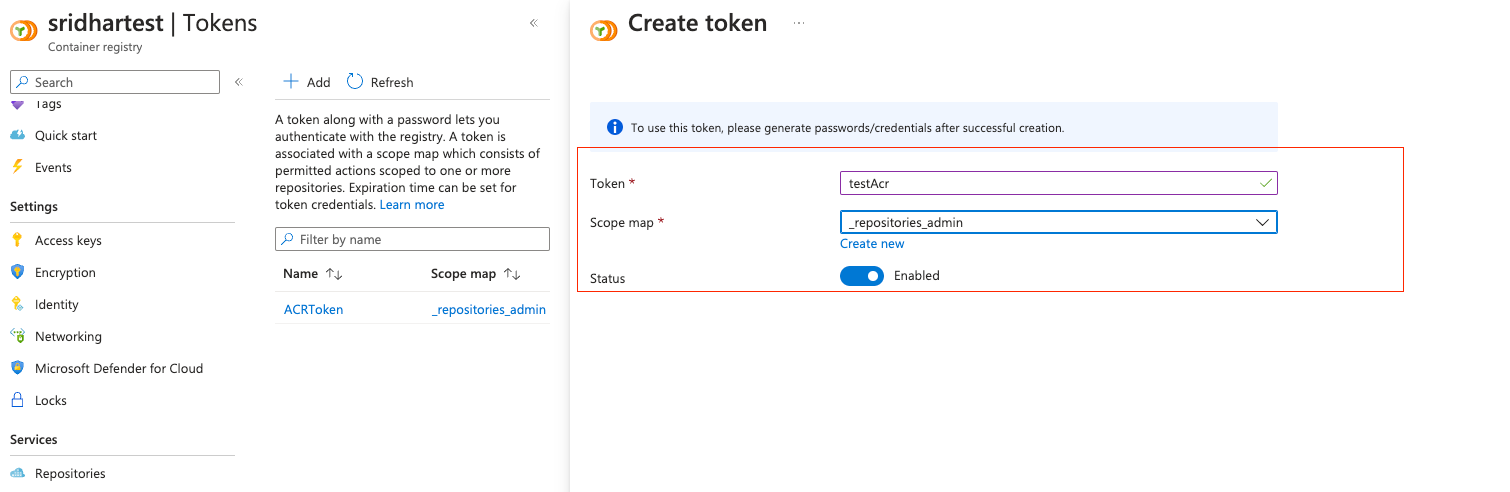

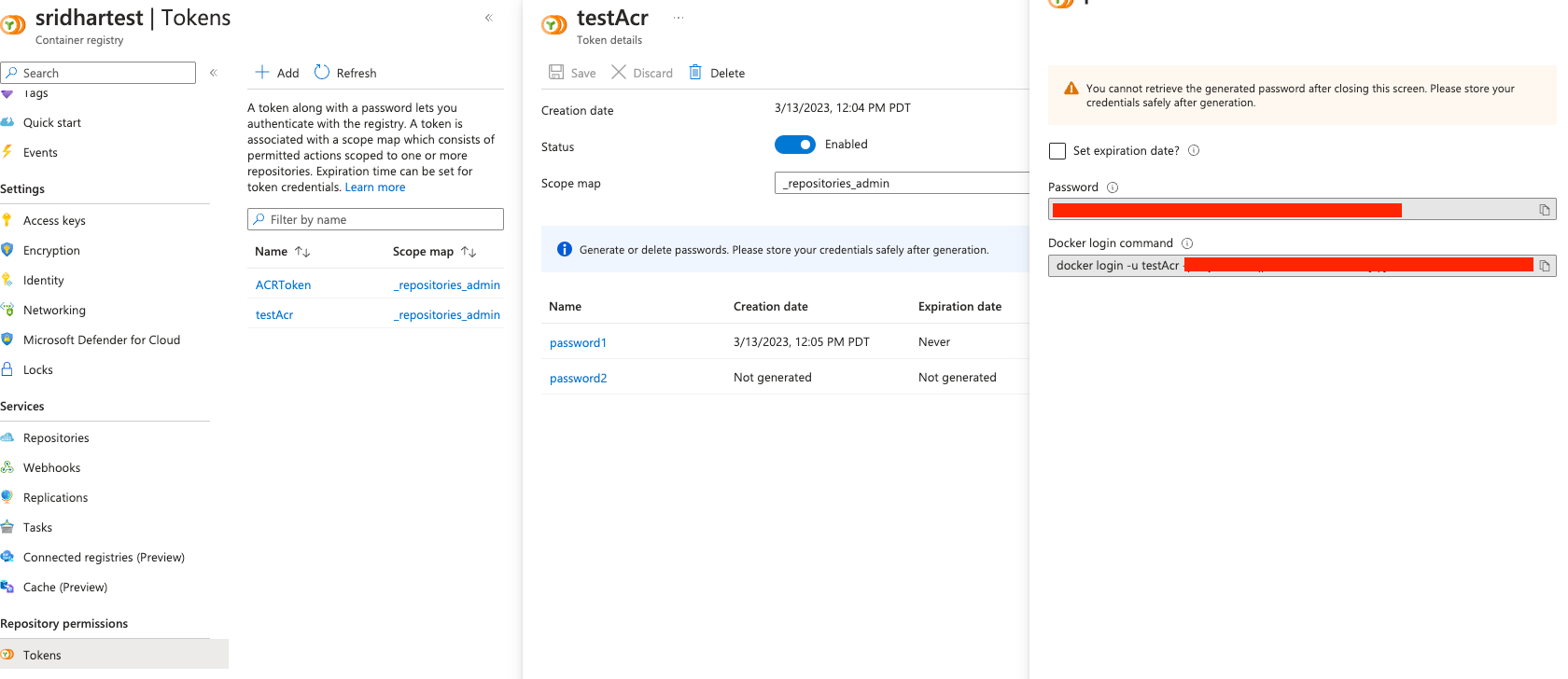

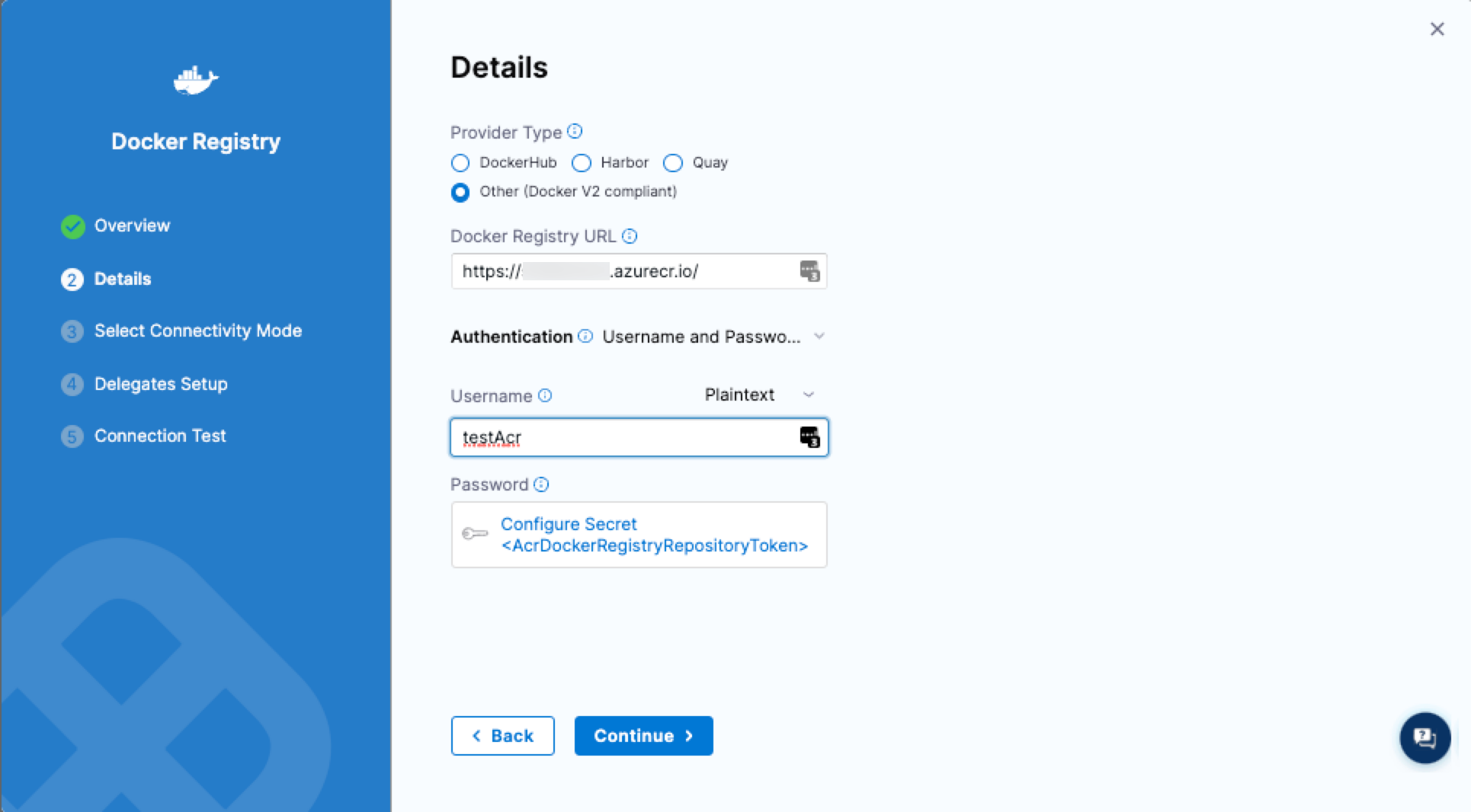

If you do not want to centrally manage service principals for access to ACR, you can use the platform-agnostic Docker Registry connector and repository-scoped permissions to connect Harness to ACR.

To use the Docker Registry connector to connect to ACR, do the following:

In Azure ACR, in Repository permissions, select Tokens.

Create a new token and scope map.

Generate the password for the token.

In Harness, create a new Docker Registry connector.

For Provider Type, select Other.

Select Username and Password for Authentication, and use the username and password for the ACR token.
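The steps above don't state the connector URL. For a registry in the Azure public cloud, the Docker Registry connector URL is normally the registry's login server, `https://<registry-name>.azurecr.io`. Below is a minimal Python sketch. The helper `acr_docker_registry_url` is hypothetical, and the name check assumes ACR's rule that registry names are 5 to 50 alphanumeric characters. Other Azure clouds use a different domain suffix, so confirm the login server shown in the Azure portal.

```python
import re

# Assumption: ACR registry names are 5-50 alphanumeric characters.
_ACR_NAME = re.compile(r"[A-Za-z0-9]{5,50}")


def acr_docker_registry_url(registry_name: str, suffix: str = "azurecr.io") -> str:
    """Return the URL to enter in a Docker Registry connector for an ACR registry."""
    if not _ACR_NAME.fullmatch(registry_name):
        raise ValueError(f"not a valid ACR registry name: {registry_name!r}")
    # ACR login servers are lowercase, for example myregistry.azurecr.io.
    return f"https://{registry_name.lower()}.{suffix}"


print(acr_docker_registry_url("MyRegistry"))  # https://myregistry.azurecr.io
```

For the username and password, use the ACR token's name and one of its generated passwords, as described in the steps.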

Amazon Elastic Container Registry (ECR)

Use ECR artifacts

You connect to ECR using a Harness AWS connector. For details on all the ECR requirements for the AWS connector, see AWS Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Harness Manager

This example uses a Harness delegate installed in AWS for credentials.

ECR connector YAML

connector:
  name: ECR
  identifier: ECR
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKey: xxxxx
        secretKeyRef: secretaccesskey
      region: us-east-1
    delegateSelectors:
      - doc-immut
    executeOnDelegate: true

Service using ECR artifact YAML

service:
  name: ECR
  identifier: ECR
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: ECR
                imagePath: todolist-sample
                tag: "1.0"
                region: us-east-1
              identifier: myapp
              type: Ecr
    type: Kubernetes
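In this YAML, `imagePath`, `region`, and `tag` identify an image whose full reference follows ECR's standard URI format, `<account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. The Python sketch below is only an aid for checking which image an artifact source points at. The helper `ecr_image_uri` is hypothetical. The account ID is an assumed input because the service YAML does not include it; it corresponds to the Registry ID setting when you pull from another account.

```python
import re


def ecr_image_uri(account_id: str, region: str, image_path: str, tag: str) -> str:
    """Build the full ECR image reference for an artifact source (hypothetical helper)."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("an AWS account ID is 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{image_path}:{tag}"


print(ecr_image_uri("123456789012", "us-east-1", "todolist-sample", "1.0"))
# 123456789012.dkr.ecr.us-east-1.amazonaws.com/todolist-sample:1.0
```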

Create the ECR connector using the Create a Connector API.

ECR connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: ECR
  identifier: ECR
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKey: xxxxx
        secretKeyRef: secretaccesskey
      region: us-east-1
    delegateSelectors:
      - doc-immut
    executeOnDelegate: true'

Create a service with an artifact source that uses the connector using the Create Services API.
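If you script connector creation in Python instead of curl, you can build the same Create a Connector request with the standard library. This sketch mirrors the curl example above: same endpoint, headers, and YAML body. The account ID and API key are that example's placeholder values, and `build_create_connector_request` is a hypothetical helper. The request is only built, not sent.

```python
import urllib.parse
import urllib.request

HARNESS_CONNECTORS_API = "https://app.harness.io/gateway/ng/api/connectors"


def build_create_connector_request(account_id: str, api_key: str, connector_yaml: str):
    """Build (but do not send) a POST to the Create a Connector API."""
    query = urllib.parse.urlencode({"accountIdentifier": account_id})
    return urllib.request.Request(
        f"{HARNESS_CONNECTORS_API}?{query}",
        data=connector_yaml.encode("utf-8"),
        headers={"Content-Type": "text/yaml", "x-api-key": api_key},
        method="POST",
    )


CONNECTOR_YAML = """connector:
  name: ECR
  identifier: ECR
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKey: xxxxx
        secretKeyRef: secretaccesskey
      region: us-east-1
    delegateSelectors:
      - doc-immut
    executeOnDelegate: true
"""

req = build_create_connector_request("12345", "pat.12345.6789", CONNECTOR_YAML)
# To send it, call urllib.request.urlopen(req) with a real account ID and API key.
```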

For the Terraform Provider ECR connector resource, go to harness_platform_connector_aws.

ECR connector example

# Credential manual
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}

For the Terraform Provider service resource, go to harness_platform_service.

You connect to ECR using a Harness AWS Connector. For details on all the ECR requirements for the AWS Connector, see AWS Connector Settings Reference.

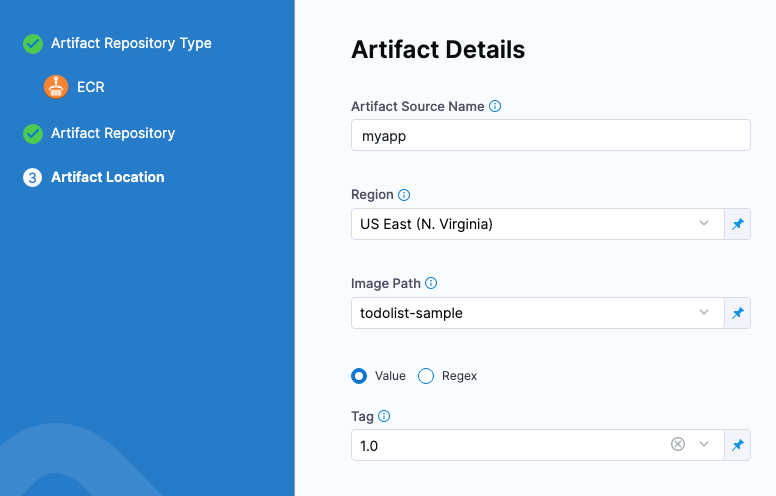

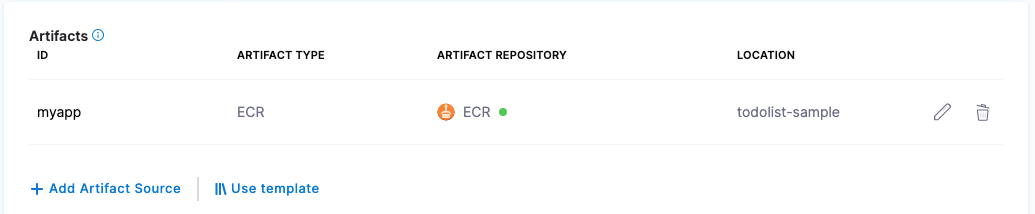

To add an artifact from ECR, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, click ECR, and then select Continue.

In ECR Repository, select or create an AWS connector that connects to the AWS account where the ECR registry is located.

Select Continue.

In Artifact Details, in Region, select the region where the artifact source is located.

If the IAM user has access to the repository in the secondary account, in Registry ID, specify the AWS account ID associated with that repository.

Note: Only tags can be fetched from the repository in the secondary account. You must enter the image path manually.

In Image Path, enter the name of the artifact you want to deploy.

In Tag, enter or select the Docker image tag for the image.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

Note: This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature. If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit. The Artifact is added to the Service Definition.
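When you pin an image with the Digest setting described above, the digest is `sha256:` followed by 64 lowercase hexadecimal characters, as defined by the OCI image specification. A reference that carries both a tag and a digest is written `repository:tag@digest`. The Python sketch below checks the digest format and builds that reference. The helper name is hypothetical. It checks only the format; whether the tag/digest pair exists is determined against the registry when the pipeline runs, as noted above.

```python
import re

# OCI image spec: a sha256 digest is "sha256:" followed by 64 lowercase hex characters.
_SHA256_DIGEST = re.compile(r"sha256:[a-f0-9]{64}")


def pinned_reference(repository: str, tag: str, digest: str) -> str:
    """Return 'repository:tag@digest' after checking the digest format."""
    if not _SHA256_DIGEST.fullmatch(digest):
        raise ValueError(f"not a sha256 digest: {digest!r}")
    return f"{repository}:{tag}@{digest}"


example_digest = "sha256:" + "a" * 64
print(pinned_reference("todolist-sample", "1.0", example_digest))
```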

Permissions

Ensure that the AWS IAM user account you use in the AWS Connector has the following policy.

Pull from ECR policy

- Policy Name: AmazonEC2ContainerRegistryReadOnly

- Policy ARN: arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly

- Description: Provides read-only access to Amazon EC2 Container Registry repositories.

- Policy JSON:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetRepositoryPolicy",
        "ecr:DescribeRepositories",
        "ecr:ListImages",
        "ecr:DescribeImages",
        "ecr:BatchGetImage"
      ],
      "Resource": "*"
    }
  ]
}
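Before you save the connector, you can check that a policy document you plan to attach grants every action in the managed policy above. The Python sketch below is a simplified check, not an IAM policy evaluator. It reads only `Allow` statements, handles `*` and `?` wildcards with `fnmatch`, and ignores `Deny` statements, conditions, and resource scoping. The function name is hypothetical.

```python
from fnmatch import fnmatchcase

# Actions from the AmazonEC2ContainerRegistryReadOnly policy shown above.
REQUIRED_ECR_ACTIONS = [
    "ecr:GetAuthorizationToken",
    "ecr:BatchCheckLayerAvailability",
    "ecr:GetDownloadUrlForLayer",
    "ecr:GetRepositoryPolicy",
    "ecr:DescribeRepositories",
    "ecr:ListImages",
    "ecr:DescribeImages",
    "ecr:BatchGetImage",
]


def missing_ecr_actions(policy: dict) -> list:
    """Return required actions not matched by any Allow statement (simplified)."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    allowed = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive, so compare in lowercase.
        allowed.extend(a.lower() for a in actions)
    return [
        action for action in REQUIRED_ECR_ACTIONS
        if not any(fnmatchcase(action.lower(), pattern) for pattern in allowed)
    ]


policy = {"Version": "2012-10-17",
          "Statement": [{"Effect": "Allow",
                         "Action": ["ecr:Describe*", "ecr:ListImages"],
                         "Resource": "*"}]}
print(missing_ecr_actions(policy))
```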

Use Docker Registry for ECR

If you do not want to use the AWS connector for ECR, you can use the platform-agnostic Docker Registry connector.

Use the following settings:

Provider Type: Select Other (Docker V2 compliant).

URL: Enter the registry host from your push command, including the https:// scheme. For example, for this ECR push command:

docker push 1234567890.dkr.ecr.us-east-2.amazonaws.com/my-private-repo:123

the URL is https://1234567890.dkr.ecr.us-east-2.amazonaws.com.

Authentication:

Username: Enter AWS. Do not enter an access key or user name.

Password: Enter the password returned by the following command (replace us-east-2 with your region):

aws ecr get-login-password --region us-east-2
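The URL and password settings above can also be derived in Python. `registry_url_from_image` strips the repository and tag from a push target and adds the `https://` scheme. `password_from_auth_response` is an alternative to the CLI command. It decodes the response returned by boto3's `ecr.get_authorization_token()`, whose base64 token decodes to `AWS:<password>`. Both helper names are hypothetical. The sketch passes in a sample response so it runs without AWS credentials. ECR authorization tokens are valid for 12 hours, so a password obtained this way must be refreshed.

```python
import base64


def registry_url_from_image(push_target: str) -> str:
    """Turn a push target such as host/repo:tag into the connector URL."""
    host = push_target.split("/", 1)[0]
    return f"https://{host}"


def password_from_auth_response(response: dict) -> str:
    """Decode the password from an ecr get_authorization_token() response."""
    token = response["authorizationData"][0]["authorizationToken"]
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    if username != "AWS":
        raise ValueError("unexpected ECR token format")
    return password


# With real credentials you would call:
#   import boto3
#   response = boto3.client("ecr", region_name="us-east-2").get_authorization_token()
sample = {"authorizationData": [{
    "authorizationToken": base64.b64encode(b"AWS:example-password").decode("ascii"),
    "proxyEndpoint": "https://1234567890.dkr.ecr.us-east-2.amazonaws.com",
}]}
print(registry_url_from_image(
    "1234567890.dkr.ecr.us-east-2.amazonaws.com/my-private-repo:123"))
print(password_from_auth_response(sample))
```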

Ensure that the AWS IAM user you use has the correct policy for pulling from ECR: the AmazonEC2ContainerRegistryReadOnly policy described in the ECR Permissions section above.

Amazon S3 Cloud Storage

Use AWS artifacts

You connect to AWS using a Harness AWS connector. For details on all the AWS requirements for the connector, see AWS Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Harness Manager

This example uses a Harness delegate installed in AWS for credentials.

AWS connector YAML

connector:
  name: jsmith-aws
  identifier: jsmithaws
  description: ""
  orgIdentifier: default
  projectIdentifier: jsmith_project
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKeyRef: jsmithawsaccesskeyid
        secretKeyRef: jsmithawssecretaccesskey
      region: us-east-1
    executeOnDelegate: false

Service using S3 artifact YAML

service:
  name: jsmith-aws-s3-test
  identifier: jsmithawss3test
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: jsmithaws
                bucketName: jsmith-bucket
                filePath: login-service.sh
              identifier: jsmith_login_service_test_sh
              type: AmazonS3
    type: Ssh

Create the AWS connector using the Create a Connector API.

AWS connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: jsmith-aws
  identifier: jsmithaws
  description: ""
  orgIdentifier: default
  projectIdentifier: jsmith_project
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKeyRef: jsmithawsaccesskeyid
        secretKeyRef: jsmithawssecretaccesskey
      region: us-east-1
    executeOnDelegate: false'

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider AWS connector resource, go to harness_platform_connector_aws.

AWS connector example

# Credential manual
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}

For the Terraform Provider service resource, go to harness_platform_service.

You connect to AWS using a Harness AWS Connector. For details on all the AWS requirements for this Connector, see AWS Connector Settings Reference.

To add an artifact from an S3 bucket, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Secure Shell.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, click Amazon S3, and then select Continue.

In AWS Connector, select or create an AWS connector that connects to the AWS account where the S3 bucket is located.

Select Continue.

In Artifact Details, specify the following:

- In Artifact Source Identifier, add a unique identifier. You can use the Harness expression <+artifact.primary.identifier> to reference this setting in your pipelines.

- In Region, select the region where the artifact source is located.

- In Bucket Name, select the bucket where the artifact is located.

- In File path, enter the path (from the bucket root) and name of the artifact you want to deploy.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

Select Submit. The Artifact is added to the Service Definition.

Permissions

You need a dedicated S3 bucket for your artifacts and an AWS connector with read/write access to this bucket.

Sample S3 Cache Bucket Policy

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowS3BucketAccess",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:DeleteObject"
      ],
      "Resource": [
        "arn:aws:s3:::your-s3-bucket/*",
        "arn:aws:s3:::your-s3-bucket"
      ]
    },
    {
      "Sid": "AllowDescribeRegions",
      "Effect": "Allow",
      "Action": "ec2:DescribeRegions",
      "Resource": "*"
    }
  ]
}

For more information on configuring an S3 connector and S3 bucket policies, go to Add an AWS connector and the AWS connector settings reference.
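The sample S3 policy uses `your-s3-bucket` as a placeholder in two resource ARNs. The bucket ARN is needed by `s3:ListBucket`, and the `/*` object ARN is needed by the object actions. Here is a minimal Python sketch that fills in a real bucket name. The function name is hypothetical, and the statements are copied from the sample.

```python
import json


def s3_artifact_policy(bucket: str) -> dict:
    """Return the sample S3 policy with a real bucket name (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowS3BucketAccess",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:ListBucket", "s3:DeleteObject"],
                # Objects in the bucket first, then the bucket itself (for ListBucket).
                "Resource": [f"arn:aws:s3:::{bucket}/*", f"arn:aws:s3:::{bucket}"],
            },
            {
                "Sid": "AllowDescribeRegions",
                "Effect": "Allow",
                "Action": "ec2:DescribeRegions",
                "Resource": "*",
            },
        ],
    }


print(json.dumps(s3_artifact_policy("jsmith-bucket"), indent=2))
```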

Amazon EC2 AMIs

Use Amazon EC2 AMI artifacts

You connect to your AWS account using a Harness AWS connector. For details on all the AWS requirements for the connector, see AWS Connector Settings Reference.

For AWS AMI artifacts, the version is the name of the AMI. You can narrow the list of names by using AMI tags and filters.
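The Python sketch below illustrates how tags and filters narrow the Version list. It applies them to made-up image records shaped like entries returned by EC2 `DescribeImages` (`ImageId`, `Name`, `Tags`). This is a local simulation, not the query Harness runs. Two behaviors are assumptions: a tag with an empty value (like `Version` in the service YAML example in this section) is treated as "the tag must exist", and `ami-image-id` is the only filter modelled.

```python
def ami_versions(images, tags=None, filters=None):
    """Return the sorted AMI names (versions) that match every tag and filter."""
    tags = tags or {}
    filters = filters or {}
    wanted_id = filters.get("ami-image-id")
    versions = []
    for image in images:
        image_tags = {t["Key"]: t["Value"] for t in image.get("Tags", [])}
        # Assumption: an empty tag value only requires the tag key to be present.
        tags_match = all(
            key in image_tags and (value == "" or image_tags[key] == value)
            for key, value in tags.items()
        )
        id_matches = wanted_id is None or image["ImageId"] == wanted_id
        if tags_match and id_matches:
            versions.append(image["Name"])
    return sorted(versions)


images = [
    {"ImageId": "ami-0001", "Name": "macos-build-farm-for-ci",
     "Tags": [{"Key": "Version", "Value": "1"}]},
    {"ImageId": "ami-0002", "Name": "web-server-v2",
     "Tags": [{"Key": "Version", "Value": "2"}]},
    {"ImageId": "ami-0003", "Name": "scratch-image", "Tags": []},
]
print(ami_versions(images, tags={"Version": ""}))
print(ami_versions(images, tags={"Version": ""}, filters={"ami-image-id": "ami-0001"}))
```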

- YAML

- API

- Terraform Provider

- Harness Manager

This example uses a Harness delegate installed in AWS for credentials.

AWS connector YAML

connector:
  name: jsmith-aws
  identifier: jsmithaws
  description: ""
  orgIdentifier: default
  projectIdentifier: jsmith_project
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKeyRef: jsmithawsaccesskeyid
        secretKeyRef: jsmithawssecretaccesskey
      region: us-east-1
    executeOnDelegate: false

Service using EC2 AMI YAML

service:
  name: jsmith-delegate-ami-test
  identifier: jsmithdelegateamitest
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: macos_build_farm_for_ci
              spec:
                connectorRef: jsmithaws
                region: us-east-1
                tags:
                  - name: Version
                    value: ""
                filters:
                  - name: ami-image-id
                    value: ami-xxxxxxxxxxxxxxxxx
                version: macos-build-farm-for-ci
              type: AmazonMachineImage
    type: Asg

Create the AWS connector using the Create a Connector API.

AWS connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: jsmith-aws
  identifier: jsmithaws
  description: ""
  orgIdentifier: default
  projectIdentifier: jsmith_project
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKeyRef: jsmithawsaccesskeyid
        secretKeyRef: jsmithawssecretaccesskey
      region: us-east-1
    executeOnDelegate: false'

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider AWS connector resource, go to harness_platform_connector_aws.

AWS connector example

# Credential manual
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}

For the Terraform Provider service resource, go to harness_platform_service.

You connect to AWS using a Harness AWS Connector. For details on all the AWS requirements for this Connector, see AWS Connector Settings Reference.

To add an AMI artifact, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select AWS Auto Scaling Group.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, click Amazon Machine Image, and then select Continue.

In AWS Connector, select or create an AWS connector that connects to the AWS account where the AMI is located.

Select Continue.

In Artifact Details, specify the following:

- In Artifact Source Identifier, add a unique identifier. You can use the Harness expression <+artifact.primary.identifier> to reference this setting in your pipelines.

- In Region, select the region where the AMI is located.

- Set the AMI Tags and/or AMI Filters to specify the AMI you want to use for the service artifact.

- In Version, select the AMI you want to deploy. The list is populated based on the specified region, tags, and filters.

Select Submit.

The Artifact is added to the Service Definition.

Nexus

Use Nexus artifacts

You connect to Nexus using a Harness Nexus Connector. For details on all the requirements for the Nexus Connector, see Nexus Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Harness Manager

Nexus connector YAML

connector:
  name: Harness Nexus
  identifier: Harness_Nexus
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: HttpHelmRepo
  spec:
    helmRepoUrl: https://nexus3.dev.harness.io/repository/test-helm/
    auth:
      type: UsernamePassword
      spec:
        username: harnessadmin
        passwordRef: nexus3pwd
    delegateSelectors:
      - gcpdocplay

Service using Nexus artifact YAML

service:
  name: Nexus Example
  identifier: Nexus_Example
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: account.Harness_Nexus
                repository: todolist
                repositoryFormat: docker
                tag: "4.0"
                spec:
                  artifactPath: nginx
                  repositoryPort: "6661"
              identifier: myapp
              type: Nexus3Registry
    type: Kubernetes

Create the Nexus connector using the Create a Connector API.

Nexus connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Harness Nexus
  identifier: Harness_Nexus
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: HttpHelmRepo
  spec:
    helmRepoUrl: https://nexus3.dev.harness.io/repository/test-helm/
    auth:
      type: UsernamePassword
      spec:
        username: harnessadmin
        passwordRef: nexus3pwd
    delegateSelectors:
      - gcpdocplay'

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider Nexus connector resource, go to harness_platform_connector_nexus.

Nexus connector example

# Credentials username password
resource "harness_platform_connector_nexus" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://nexus.example.com"
  delegate_selectors = ["harness-delegate"]
  version            = "3.x"

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}

# Credentials anonymous
resource "harness_platform_connector_nexus" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://nexus.example.com"
  version            = "version"
  delegate_selectors = ["harness-delegate"]
}

For the Terraform Provider service resource, go to harness_platform_service.

You connect to Nexus using a Harness Nexus Connector. For details on all the requirements for the Nexus Connector, see Nexus Connector Settings Reference.

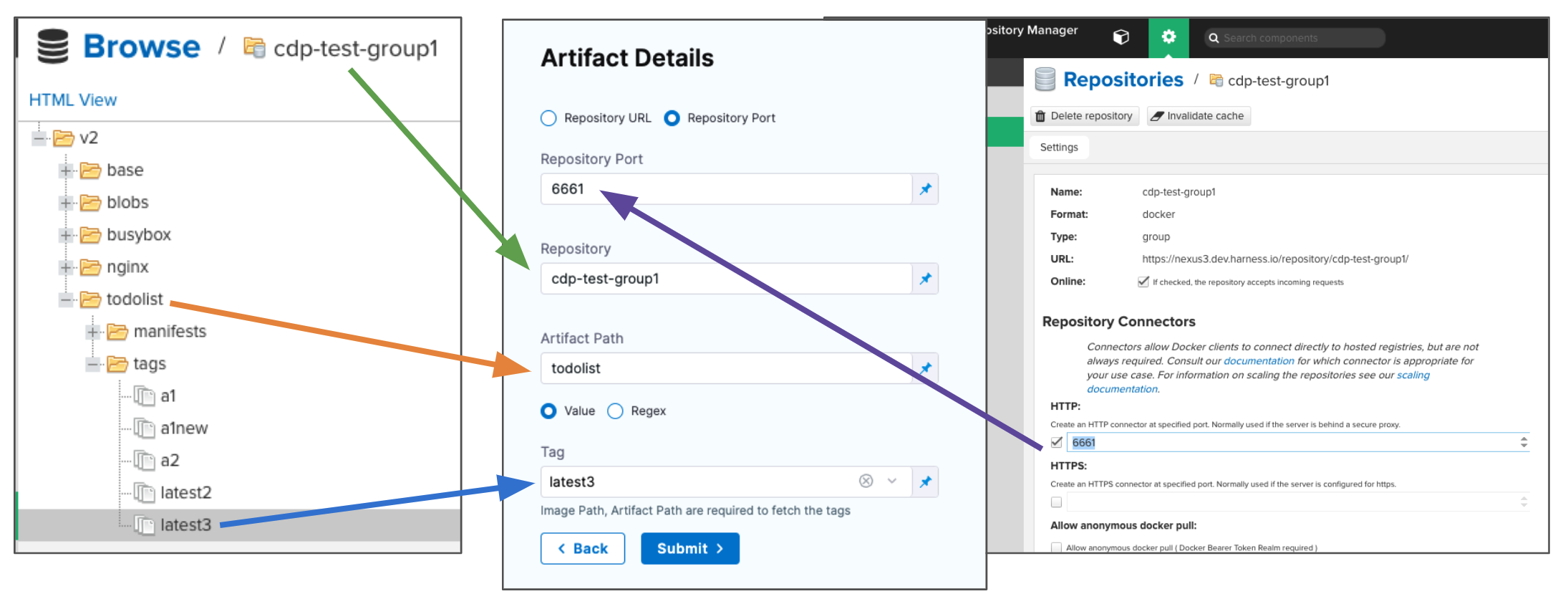

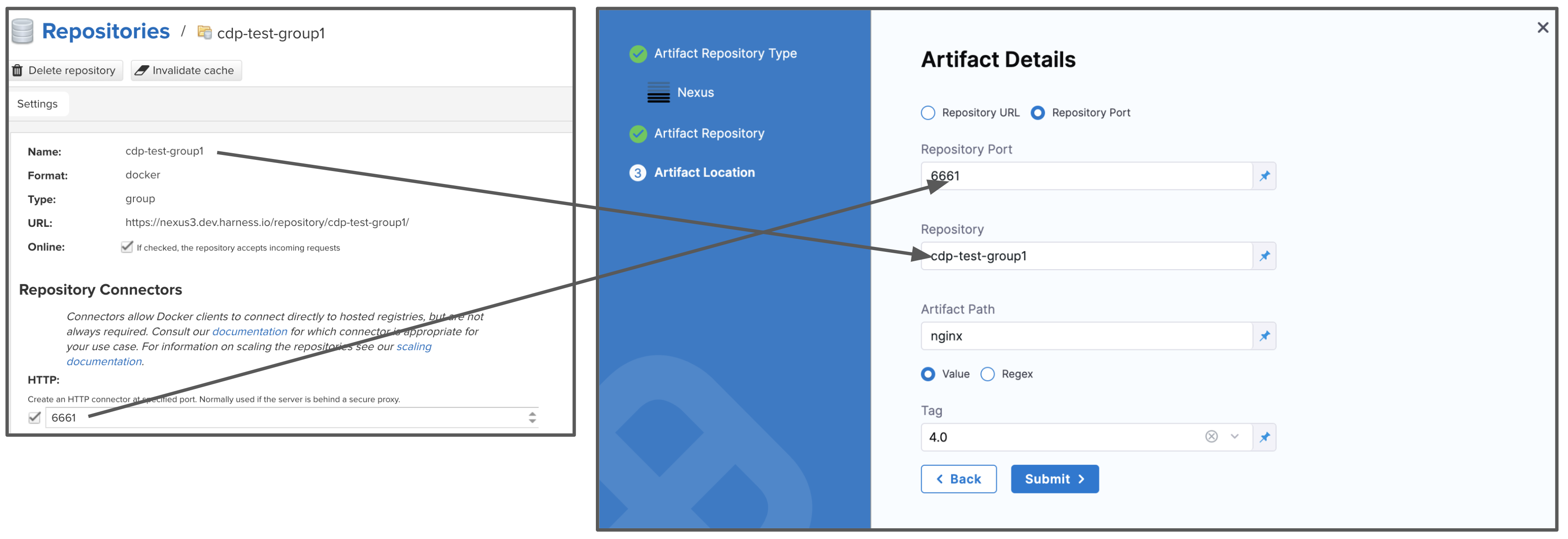

To add an artifact from Nexus, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, click Add Artifact Source.

In Artifact Repository Type, click Nexus, and then select Continue.

In Nexus Repository, select or create a Nexus Connector that connects to the Nexus account where the repo is located.

Select Continue.

Select Repository URL or Repository Port.

- Repository Port is more commonly used and can be taken from the repo settings. Each repo uses its own port.

- Repository URL is typically used for a custom infrastructure (for example, when Nexus is hosted behind a reverse proxy).

In Repository, enter the name of the repo.

In Artifact Path, enter the path to the artifact you want.

In Tag, enter or select the Docker image tag for the image.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

Note: This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature. If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit. The Artifact is added to the Service Definition.
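The Repository Port and Repository URL options produce different Docker image references. With a port, the image is typically addressed as `<nexus-host>:<port>/<artifact path>:<tag>`. With a repository URL, such as a reverse-proxy hostname, the port is usually omitted. The Python sketch below shows both forms. The helper and host names are hypothetical, and `nginx`, `6661`, and `4.0` come from the Nexus service YAML example.

```python
from typing import Optional


def nexus_image_reference(artifact_path: str, tag: str, *, host: Optional[str] = None,
                          port: Optional[str] = None,
                          repository_url: Optional[str] = None) -> str:
    """Build a Docker image reference for a Nexus 3 Docker repository (sketch)."""
    if repository_url:
        # Docker image references do not include the http(s):// scheme.
        registry = repository_url.split("://", 1)[-1].rstrip("/")
    elif host and port:
        registry = f"{host}:{port}"
    else:
        raise ValueError("set either repository_url, or both host and port")
    return f"{registry}/{artifact_path}:{tag}"


print(nexus_image_reference("nginx", "4.0", host="nexus.example.com", port="6661"))
# nexus.example.com:6661/nginx:4.0
print(nexus_image_reference("nginx", "4.0", repository_url="https://docker.example.com/"))
# docker.example.com/nginx:4.0
```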

Permissions

Ensure the connected user account has the following permissions in the Nexus Server.

- Repo: All repositories (Read)

- Nexus UI: Repository Browser

For Nexus 3, when used as a Docker repo, the user needs:

- A role with the nx-repository-view-*_*_* privilege.

Artifactory

Use Artifactory artifacts

You connect to Artifactory (JFrog) using a Harness Artifactory Connector. For details on all the requirements for the Artifactory Connector, see Artifactory Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Harness Manager

Artifactory connector YAML

connector:
  name: artifactory-tutorial-connector
  identifier: artifactorytutorialconnector
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Artifactory
  spec:
    artifactoryServerUrl: https://harness.jfrog.io/artifactory/
    auth:
      type: Anonymous
    executeOnDelegate: false

Service using Artifactory artifact YAML

service:
  name: Artifactory Example
  identifier: Artifactory_Example
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: artifactorytutorialconnector
                artifactPath: alpine
                tag: 3.14.2
                digest: sha256:xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
                repository: bintray-docker-remote
                repositoryUrl: harness-docker.jfrog.io
                repositoryFormat: docker
              identifier: myapp
              type: ArtifactoryRegistry
    type: Kubernetes

Create the Artifactory connector using the Create a Connector API.

Artifactory connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: artifactory-tutorial-connector
  identifier: artifactorytutorialconnector
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Artifactory
  spec:
    artifactoryServerUrl: https://harness.jfrog.io/artifactory/
    auth:
      type: Anonymous
    executeOnDelegate: false'

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider Artifactory connector resource, go to harness_platform_connector_artifactory.

Artifactory connector example

# Authentication mechanism as username and password
resource "harness_platform_connector_artifactory" "example" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  org_id             = harness_platform_project.test.org_id
  project_id         = harness_platform_project.test.id
  url                = "https://artifactory.example.com"
  delegate_selectors = ["harness-delegate"]

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}

# Authentication mechanism as anonymous
resource "harness_platform_connector_artifactory" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  org_id             = harness_platform_project.test.org_id
  project_id         = harness_platform_project.test.id
  url                = "https://artifactory.example.com"
  delegate_selectors = ["harness-delegate"]
}

For the Terraform Provider service resource, go to harness_platform_service.

You connect to Artifactory (JFrog) using a Harness Artifactory Connector. For details on all the requirements for the Artifactory Connector, go to Artifactory Connector Settings Reference.

To add an artifact from Artifactory, do the following:

In your project, in CD (Deployments), select Services.

Select Manage Services, and then select New Service.

Enter a name for the service and select Save.

Select Configuration.

In Service Definition, select Kubernetes.

In Artifacts, select Add Artifact Source.

In Artifact Repository Type, select Artifactory, and then select Continue.

In Artifactory Repository, select or create an Artifactory Connector that connects to the Artifactory account where the repo is located. Select Continue.

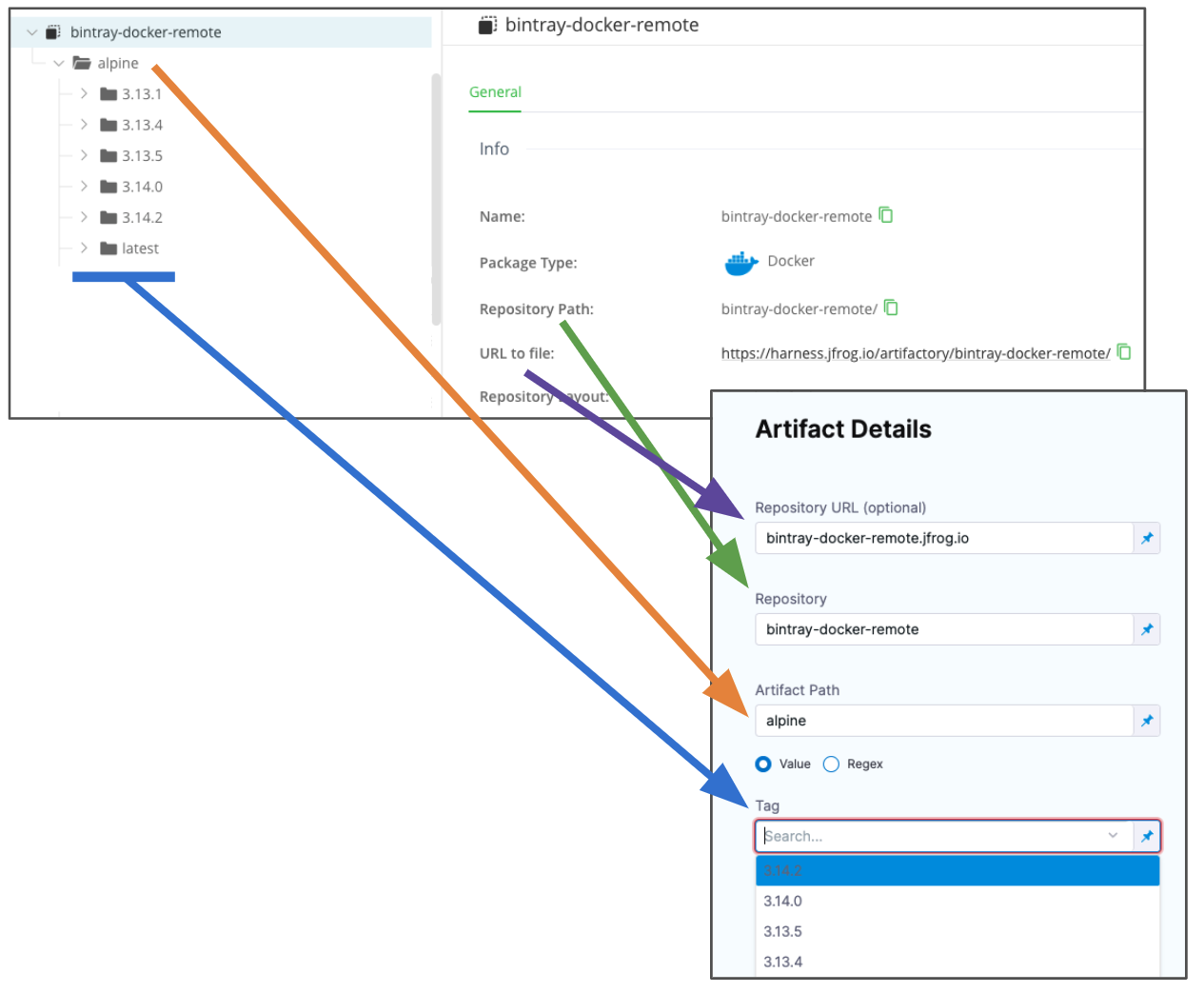

The Artifact Details settings appear.

In Repository URL, enter the URL from the docker login command in Artifactory's Set Me Up settings.

In Repository, enter the repo name. For example, if the full path is docker-remote/library/mongo/3.6.2, enter docker-remote.

In Artifact Path, enter the path to the artifact. For example, if the full path is docker-remote/library/mongo/3.6.2, enter library/mongo.

In Tag, enter or select the Docker image tag for the image.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

To specify an image digest, use Digest and the unique identifier for the image you want to use. Specifying an image by tag and digest (rather than tag alone) is useful when you want to deploy an image with a fixed digest/SHA for your service.

Note: This option is behind the feature flag CD_NG_DOCKER_ARTIFACT_DIGEST. Contact Harness Support to enable the feature. If an image with the specified tag/digest combination does not exist in the artifact registry, the pipeline will fail.

Select Submit. The Artifact is added to the Service Definition.
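The Repository and Artifact Path settings split a full Artifactory path such as `docker-remote/library/mongo/3.6.2`. The first segment is the repository, the middle segments are the artifact path, and the last segment is the tag. Here is a minimal Python sketch of that split, assuming the full path always ends in a tag. The function and type names are hypothetical.

```python
from typing import NamedTuple


class ArtifactoryDockerPath(NamedTuple):
    repository: str
    artifact_path: str
    tag: str


def split_artifactory_path(full_path: str) -> ArtifactoryDockerPath:
    """Split '<repository>/<artifact path>/<tag>' into the Harness settings."""
    parts = [p for p in full_path.strip("/").split("/") if p]
    if len(parts) < 3:
        raise ValueError("expected <repository>/<artifact path>/<tag>")
    return ArtifactoryDockerPath(parts[0], "/".join(parts[1:-1]), parts[-1])


print(split_artifactory_path("docker-remote/library/mongo/3.6.2"))
# ArtifactoryDockerPath(repository='docker-remote', artifact_path='library/mongo', tag='3.6.2')
```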

Permissions

Make sure the following permissions are granted to the user:

- The user, whether anonymous or a specific username, must be a privileged user to access the API. A username and password are not mandatory.

- Read permission to all Repositories.

If Artifactory is used as a Docker repo, the user needs:

- List images and tags

- Pull images

Bamboo

Currently, Bamboo support is behind the feature flag BAMBOO_ARTIFACT_NG. Contact Harness Support to enable the feature.

Use Bamboo build plans as artifacts

You can use Bamboo for build plans when performing deployments using the SSH/WinRM, Serverless.com Framework, and Deployment Templates deployment types. You can also trigger deployments based on new build plans in Bamboo.

You connect to Bamboo using a Harness Bamboo connector.

- YAML

- API

- Terraform Provider

- Harness Manager

Bamboo connector YAML

connector:
  name: Bamboo
  identifier: Bamboo
  description: ""
  orgIdentifier: default
  projectIdentifier: Docs
  type: Bamboo
  spec:
    bambooUrl: https://bamboo.dev.example.io
    auth:
      type: UsernamePassword
      spec:
        username: admin
        passwordRef: bamboo
    delegateSelectors:
      - mydelegate

Service using Bamboo plans YAML

service:
  name: Bamboo
  identifier: Bamboo
  tags: {}
  serviceDefinition:
    spec:
      artifacts:
        primary:
          primaryArtifactRef: myplan
          sources:
            - identifier: myplan
              spec:
                connectorRef: Bamboo
                artifactPaths: artifacts
                build: 133
                planKey: PLAN1
              type: Bamboo
    type: Ssh
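In the service YAML, primaryArtifactRef selects one of the entries under sources. Here the value myplan matches the source identifier myplan. It can also be the runtime input <+input>, as in the GitHub Packages example. The following hypothetical Python check works on the service YAML after parsing. The YAML is written out as a plain dict, so no YAML library is needed. This is an illustration and does not reproduce Harness's own validation logic.

```python
def check_primary_artifact_ref(service: dict) -> str:
    """Return the primary source identifier, or '<+input>' for a runtime input.

    Raises ValueError when primaryArtifactRef matches no source identifier.
    """
    primary = service["service"]["serviceDefinition"]["spec"]["artifacts"]["primary"]
    ref = primary["primaryArtifactRef"]
    if ref == "<+input>":
        return ref  # chosen when the pipeline runs
    source_ids = {source["identifier"] for source in primary["sources"]}
    if ref not in source_ids:
        raise ValueError(f"primaryArtifactRef {ref!r} not in {sorted(source_ids)}")
    return ref


# The Bamboo service YAML above, as the dict a YAML parser would return.
bamboo_service = {
    "service": {
        "name": "Bamboo",
        "identifier": "Bamboo",
        "tags": {},
        "serviceDefinition": {
            "spec": {
                "artifacts": {
                    "primary": {
                        "primaryArtifactRef": "myplan",
                        "sources": [
                            {
                                "identifier": "myplan",
                                "spec": {
                                    "connectorRef": "Bamboo",
                                    "artifactPaths": "artifacts",
                                    "build": 133,
                                    "planKey": "PLAN1",
                                },
                                "type": "Bamboo",
                            }
                        ],
                    }
                }
            },
            "type": "Ssh",
        },
    }
}

print(check_primary_artifact_ref(bamboo_service))
```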

Create the Bamboo connector using the Create a Connector API.

Bamboo connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Bamboo
  identifier: Bamboo
  description: ""
  orgIdentifier: default
  projectIdentifier: Docs
  type: Bamboo
  spec:
    bambooUrl: https://bamboo.dev.example.io
    auth:
      type: UsernamePassword
      spec:
        username: admin
        passwordRef: bamboo
    delegateSelectors:
      - mydelegate'
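If you script connector creation in Python rather than curl, you can build the same request (method, URL, headers, and YAML body) with the standard library's urllib.request. This is a sketch. The account ID and API key are the placeholder values from the curl example, and the name build_connector_request is hypothetical.

```python
import urllib.parse
import urllib.request

CONNECTORS_ENDPOINT = "https://app.harness.io/gateway/ng/api/connectors"


def build_connector_request(
    account_id: str, api_key: str, connector_yaml: str
) -> urllib.request.Request:
    """Build, without sending, a POST matching the curl example."""
    query = urllib.parse.urlencode({"accountIdentifier": account_id})
    return urllib.request.Request(
        f"{CONNECTORS_ENDPOINT}?{query}",
        data=connector_yaml.encode("utf-8"),
        headers={"Content-Type": "text/yaml", "x-api-key": api_key},
        method="POST",
    )


# Same connector YAML and placeholder credentials as the curl example.
BAMBOO_CONNECTOR_YAML = """\
connector:
  name: Bamboo
  identifier: Bamboo
  description: ""
  orgIdentifier: default
  projectIdentifier: Docs
  type: Bamboo
  spec:
    bambooUrl: https://bamboo.dev.example.io
    auth:
      type: UsernamePassword
      spec:
        username: admin
        passwordRef: bamboo
    delegateSelectors:
      - mydelegate
"""

request = build_connector_request("12345", "pat.12345.6789", BAMBOO_CONNECTOR_YAML)
print(request.get_method(), request.full_url)
# To send it, call urllib.request.urlopen(request) with a real account ID and API key.
```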

Then use the Create Services API to create a service whose artifact source uses this connector.

The Terraform Provider Bamboo connector resource is coming soon.

To add a plan from Bamboo, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Secure Shell or one of the other deployment types that support Bamboo.

- In Artifacts, select Add Artifact Source.

- In Artifact Repository Type, select Bamboo, and then select Continue.

- In Bamboo Repository, select or create a Bamboo connector that connects to the Bamboo account where the plan is located, and then select Continue. The Artifact Details settings appear.

- In Artifact Source Identifier, enter a name for this artifact.

- In Plan Name, select the name of the plan.

- In Artifact Paths, select the artifact path for the plan.

- In Bamboo Builds, select the build to use.

- Select Submit. The artifact is added to the service definition.

Build plan permissions

Make sure the connected user account has the following permissions on the Bamboo Server:

- View plan.

- Build plan (if you plan to trigger a build as part of your pipeline).

For more information, go to Bamboo Permissions.

GitHub Packages

Use GitHub Packages as artifacts

You can use GitHub Packages as artifacts for deployments.

Supported package types (packageType):

- Docker

- NPM

- Maven

- NuGet

Currently, support for NPM, Maven, and NuGet is behind the feature flag CDS_GITHUB_PACKAGES. Contact Harness Support to enable the feature.

You connect to GitHub using a Harness GitHub connector, username, and personal access token (PAT).



GitHub Packages connector YAML

connector:
  name: GitHub Packages
  identifier: GitHub_Packages
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Github
  spec:
    url: https://github.com/johndoe/myapp.git
    validationRepo: https://github.com/johndoe/test.git
    authentication:
      type: Http
      spec:
        type: UsernameToken
        spec:
          username: johndoe
          tokenRef: githubpackages
    apiAccess:
      type: Token
      spec:
        tokenRef: githubpackages
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
    type: Repo

Service using GitHub Packages artifact YAML

service:
  name: Github Packages
  identifier: Github_Packages
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: myapp
              spec:
                connectorRef: GitHub_Packages
                org: ""
                packageName: tweetapp
                packageType: container
                version: latest
              type: GithubPackageRegistry
    type: Kubernetes
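The org, packageType, and packageName fields identify a package in GitHub's package registry. To show what those fields point to, the following sketch builds the GitHub REST API URL that lists a package's versions. It calls GitHub directly and is not how Harness is implemented. The name package_versions_url is hypothetical. We also assume that an empty org means the package belongs to the user who owns the token, so the sketch uses GitHub's /user endpoint in that case.

```python
from urllib.parse import quote

GITHUB_API = "https://api.github.com"

# package_type values accepted by GitHub's REST API for packages.
GITHUB_PACKAGE_TYPES = {"npm", "maven", "rubygems", "docker", "nuget", "container"}


def package_versions_url(package_type: str, package_name: str, org: str = "") -> str:
    """Return the GitHub REST API URL that lists a package's versions.

    Assumption: an empty org means a package owned by the authenticated
    user, so /user is used instead of /orgs/{org}.
    """
    if package_type not in GITHUB_PACKAGE_TYPES:
        raise ValueError(f"unsupported package type: {package_type!r}")
    # Package names may contain '/', which must be percent-encoded in the path.
    name = quote(package_name, safe="")
    owner = f"orgs/{quote(org, safe='')}" if org else "user"
    return f"{GITHUB_API}/{owner}/packages/{package_type}/{name}/versions"


# Values from the service YAML above: org "", packageType container, packageName tweetapp.
print(package_versions_url("container", "tweetapp"))
```

To send the request, GitHub requires a token with the read:packages scope, passed in the Authorization header.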

Create the GitHub connector using the Create a Connector API.

GitHub connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: GitHub Packages
  identifier: GitHub_Packages
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Github
  spec:
    url: https://github.com/johndoe/myapp.git
    validationRepo: https://github.com/johndoe/test.git
    authentication:
      type: Http
      spec:
        type: UsernameToken
        spec:
          username: johndoe
          tokenRef: githubpackages
    apiAccess:
      type: Token
      spec:
        tokenRef: githubpackages
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
    type: Repo'

Then use the Create Services API to create a service whose artifact source uses this connector.

For the Terraform Provider GitHub connector resource, go to harness_platform_connector_github.

GitHub connector examples

# HTTP credentials: username and personal access token
resource "harness_platform_connector_github" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://github.com/account"
  connection_type    = "Account"
  validation_repo    = "some_repo"
  delegate_selectors = ["harness-delegate"]
  credentials {
    http {
      username  = "username"
      token_ref = "account.secret_id"
    }
  }
}

# Alternative: HTTP credentials plus a token for GitHub API access.
# This reuses the resource name "test"; rename it if you declare both examples in one module.
resource "harness_platform_connector_github" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://github.com/account"
  connection_type    = "Account"
  validation_repo    = "some_repo"
  delegate_selectors = ["harness-delegate"]
  credentials {
    http {
      username  = "username"
      token_ref = "account.secret_id"
    }
  }
  api_authentication {
    token_ref = "account.secret_id"
  }
}

resource "harness_platform_connector_github" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://github.com/account"
  connection_type    = "Account"
  validation_repo    = "some_repo"
  delegate_selectors = ["harness-delegate"]
  credentials {
    http {