Google Cloud Functions deployments

This topic explains how to deploy new Cloud Functions to Google Cloud using Harness.

Supported versions

Harness integrates with Google Cloud Functions, Google's serverless offering, and supports deploying both 1st gen and 2nd gen functions.

To review the differences between Google Functions 1st gen and 2nd gen, go to Google Cloud Function documentation.

Harness Cloud Functions 1st gen support

Harness supports the following:

- Basic deployments.

- Harness deploys the new function and terminates the old one by sending 100% of traffic to the new function.

- For rollback, Harness does not perform revision-based rollback. Instead, if a deployment fails, Harness takes a snapshot of the last known good state of the function and reapplies it.

Harness Cloud Functions 2nd gen support

Harness supports the following:

- Basic, blue green, and canary deployments.

- Harness leverages the Cloud Run revisions capability that Google Cloud offers to configure rollback.

Cloud Functions limitations

- For Google Cloud Functions 2nd gen, Harness does not support Google Cloud Source Repository at this time. Only Google Cloud Storage is supported.

- For Google Cloud Functions 1st gen, Harness supports both Google Cloud Storage and Google Cloud Source.

Deployment summary

Here's a high-level summary of the setup steps.

Harness setup summary

- Create a Harness CD pipeline.

- Add a Deploy stage.

- Select the deployment type Google Cloud Functions, and then select Set Up Stage.

- Select Add Service.

- Add the function definition to the new Cloud Function service. You can paste in the YAML or link to a Git repo hosting the YAML.

- Save the new service.

- Select New Environment, name the new environment and select Save.

- In Infrastructure Definition, select New Infrastructure.

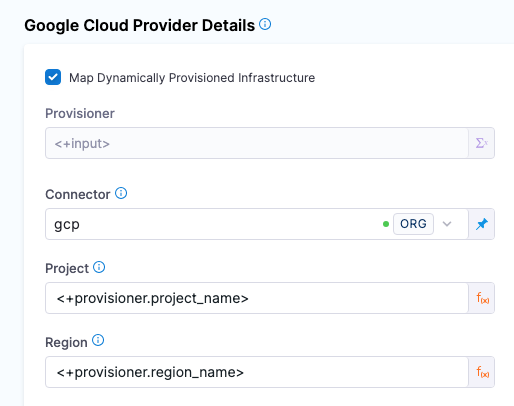

- In Google Cloud Provider Details, create or select the Harness GCP connector, GCP project, and GCP region, and select Save.

- Select Configure and select the deployment strategy: basic, canary, or blue green.

- Harness will automatically add the Deploy Cloud Function step. No further configuration is needed.

- For canary and blue green strategies, the Cloud Function Traffic Shift step is also added. In this step's Traffic Percent setting, enter the percentage of traffic to switch to the new revision of the function.

- For canary deployments, you can add multiple Cloud Function Traffic Shift steps to roll out the shift gradually.

- For blue green deployments, you can simply use 100 in the Traffic Percent setting.

- Select Save, and then run the pipeline.
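As a rough sketch of where these steps end up, the stage YAML for a basic deployment might look like the following. The stage name and identifier are hypothetical, the service, environment, and infrastructure identifiers reuse the examples later in this topic, and the failure strategy and rollback steps are omitted. Treat the YAML that Harness generates for your own stage as the reference.

```yaml
# Sketch of a Deploy stage for a basic Cloud Functions deployment.
# Stage name and identifier are placeholders, not values from this topic.
- stage:
    name: Deploy Function
    identifier: Deploy_Function
    type: Deployment
    spec:
      deploymentType: GoogleCloudFunctions
      service:
        serviceRef: Google_Function        # identifier of the Harness service
      environment:
        environmentRef: dev                # identifier of the Harness environment
        infrastructureDefinitions:
          - identifier: dev                # infrastructure definition in that environment
      execution:
        steps:
          - step:
              name: Deploy Cloud Function
              identifier: deployCloudFunction
              type: DeployCloudFunction
              timeout: 10m
              spec: {}
```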

Cloud Functions permission requirements

Harness supports Google Cloud Functions 1st and 2nd gen. There are minor differences in the permissions required by each generation. For a detailed breakdown, go to Access control with IAM from Google.

The permissions listed below work for both 1st and 2nd gen.

When you set up a Harness GCP connector to connect Harness with your GCP account, the GCP IAM user or service account must have the appropriate permissions assigned to their account.

Cloud Functions minimum permissions

Cloud Functions supports the basic roles of Editor, Admin, Developer, and Viewer. For details, go to Cloud Functions IAM Roles.

You can use the Admin role or the permissions below.

Here are the minimum permissions required for deploying Cloud Functions.

- Cloud Functions API:

  - cloudfunctions.functions.create: Allows the user to create new functions.

  - cloudfunctions.functions.update: Allows the user to update existing functions.

  - cloudfunctions.functions.list: Allows the user to list existing functions.

  - cloudfunctions.operations.get: Allows the user to get the status of a function deployment.

- Cloud Run API:

  - run.services.create: Allows the user to create new Cloud Run services.

  - run.services.update: Allows the user to update existing Cloud Run services.

  - run.services.get: Allows the user to get information about a Cloud Run service.

  - run.revisions.create: Allows the user to create new revisions for a Cloud Run service.

  - run.revisions.get: Allows the user to get information about a revision of a Cloud Run service.

  - run.routes.create: Allows the user to create new routes for a Cloud Run service.

  - run.routes.get: Allows the user to get information about a route of a Cloud Run service.

Note that this is the minimum set of permissions required for deploying Cloud Functions and running them on Cloud Run via the API. Depending on your use case, you may need additional permissions for other GCP services such as Cloud Storage, Pub/Sub, or Cloud Logging.

Also, note that in order to call the Cloud Functions and Cloud Run APIs, the user or service account must have appropriate permissions assigned for calling the APIs themselves. This may include permissions like cloudfunctions.functions.getIamPolicy, run.services.getIamPolicy, cloudfunctions.functions.testIamPermissions, run.services.testIamPermissions, and iam.serviceAccounts.actAs.

For details, go to Cloud Functions API IAM permissions and Cloud Functions Access control with IAM.
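If you prefer a custom role over the Admin role, one option is a role definition file passed to `gcloud iam roles create <ROLE_ID> --project=<PROJECT_ID> --file=<FILE>`. The sketch below is an illustration only: the title and description are hypothetical, and the list copies a subset of the permission names mentioned in this section. Confirm each name, and any others you add, against Google's IAM permissions reference, because role creation may reject names it does not recognize.

```yaml
# Custom IAM role definition (sketch). Title and description are placeholders.
title: Harness Cloud Functions Deployer
description: Permissions used by the Harness GCP connector to deploy Cloud Functions
stage: GA
includedPermissions:
  # Cloud Functions API
  - cloudfunctions.functions.create
  - cloudfunctions.functions.update
  - cloudfunctions.functions.list
  - cloudfunctions.operations.get
  # Cloud Run API (used by 2nd gen functions)
  - run.services.create
  - run.services.update
  - run.services.get
  - run.revisions.get
  - run.routes.get
  # Needed when the function runs as a service account
  - iam.serviceAccounts.actAs
```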

Harness also pulls the function ZIP file from your Google Cloud Storage bucket.

For Google Cloud Storage (GCS), the following roles are required:

- Storage Object Viewer (roles/storage.objectViewer)

- Storage Object Admin (roles/storage.objectAdmin)

For more information, go to the GCP documentation about Cloud IAM roles for Cloud Storage.

Ensure the Harness delegate you have installed can reach storage.cloud.google.com and your GCR registry host name, for example gcr.io.

For Google Cloud Source, the following roles are required:

- Source Repository Reader (roles/source.reader)

- Source Repository Writer (roles/source.writer)

For more information, go to Cloud Source Repositories Access control with IAM.

Cloud Functions services

The Harness Cloud Functions service contains the following:

- Function Definition:

- You can enter the function manifest YAML in Harness or use a remote function manifest YAML in a Git repo.

- Artifacts:

- You add a connection to the function ZIP file in Google Cloud Storage (1st and 2nd gen) or Google Cloud Source (1st gen).

How the Function Definition and Artifact work together

The function manifest YAML in Function Definition and the function zip file in Artifacts work together to define and deploy a Google Cloud Function.

The function manifest YAML file is a configuration file that defines the function's name, runtime environment, entry point, and other configuration options. The YAML file is used to specify the function's metadata, but it does not include the function's code.

The function code is packaged in a zip file that contains the function's source code, dependencies, and any other necessary files. The zip file must be created based on the manifest file, which specifies the entry point and runtime environment for the function.

When you deploy a function using the manifest file, the Cloud Functions service reads the configuration options from the YAML file and uses them to create the function's deployment package. The deployment package includes the function code (from the zip file), the runtime environment, and any dependencies or configuration files specified in the manifest file.

Function Definition

The Function Definition is the parameter file you use to define your Google Function. The parameter file you add here maps to one Google Function.

To use Google Cloud Functions, in the Harness service, in Deployment Type, you select Google Cloud Functions.

Next, in Google Cloud Function Environment Version, you select 1st gen or 2nd gen.

- Google Functions 2nd gen: The YAML parameters for Google Functions 2nd gen are defined in the google.cloud.functions.v2 function message from Google Cloud.

- You can define details of the ServiceConfig and BuildConfig in the YAML, as shown in the examples below.

- Google Functions 1st gen: The YAML parameters for Google Functions 1st gen are defined in the google.cloud.functions.v1 CloudFunction message from Google Cloud.

1st gen function definition example

Here is a Function Definition example.

function:
  name: my-function
  runtime: python37
  entryPoint: my_function
  httpsTrigger:
    securityLevel: SECURE_OPTIONAL

In httpsTrigger, you do not need to specify url.

2nd gen function definition examples

Here are some Function Definition examples.

Sample Google Function definition included in Harness

# The following are the minimum set of parameters required to create a Google Cloud Function.
# If you use a remote manifest, please ensure it includes all of these parameters.
function:
  name: <functionName>
  buildConfig:
    runtime: nodejs18
    entryPoint: helloGET
  environment: GEN_2
function_id: <functionName>

Example 1: A basic Cloud Function that triggers on an HTTP request

function:
  name: my-http-function
  buildConfig:
    runtime: nodejs14
    entryPoint: myFunction
  environment: GEN_2
function_id: my-http-function

Example 2: A Cloud Function that uses environment variables

function:
  name: my-env-function
  buildConfig:
    runtime: python38
    entryPoint: my_function
  environment: GEN_2
function_id: my-env-function
environmentVariables:
  MY_VAR: my-value

Example 3: A Cloud Function that uses Cloud Storage as a trigger

function:
  name: my-storage-function
  buildConfig:
    runtime: go111
    entryPoint: MyFunction
  environment: GEN_2
function_id: my-storage-function
trigger:
  eventType: google.storage.object.finalize
  resource: projects/_/buckets/my-bucket
availableMemoryMb: 512
timeout: 180s

Example 4: A Cloud Function that uses service config and environment variables

function:
  name: canaryDemo-<+env.name>
  serviceConfig:
    environment_variables:
      MY_ENV_VAR: 'True'
      GCF_FF_KEY: '<+env.variables.GCF_FF_KEY>'
  buildConfig:
    runtime: python39
    entryPoint: hello_world
  environment: GEN_2
function_id: canaryDemo-<+env.name>

Example 5: A Cloud Function that uses secret environment variables

This example uses the secret_environment_variables parameter. It has the information necessary to fetch the secret value from secret manager and expose it as an environment variable. For more information, go to SecretEnvVar in Google docs.

# The following are the minimum set of parameters required to create a Google Cloud Function.
# If you use a remote manifest, please ensure it includes all of these parameters.
function:
  name: "my-secret-env-func"
  description: "Using Secret Environment Variables"
  region: "us-east1"
  runtime: "nodejs16"
  entryPoint: myFunction
  max_instances: 1
  eventTrigger:
    event_type: "providers/cloud.pubsub/eventTypes/topic.publish"
    resource: "projects/<project>/topics/<topic>"
  environment_variables:
    MY_ENV_VAR1: value1
    MY_ENV_VAR2: value2
  secret_environment_variables: [{ key: "MY_SECRET_ENV_VAR1", project_id: <project_id>, secret: "<secretName1>", version: "<secretVersion1>" }, { key: "MY_SECRET_ENV_VAR2", project_id: <project_id>, secret: "<secretName2>", version: "<secretVersion2>" }]

Artifacts

In Artifacts, you add the location of the function ZIP file in Google Cloud Storage that corresponds to the YAML manifest file you added in Function Definition.

You use a Harness GCP connector to connect to your Cloud Storage bucket. The GCP connector credentials should meet the requirements in Cloud Functions permission requirements.

Adding a Cloud Function service

Here's how you add a Harness Cloud Function service.


Here's a Cloud Functions service YAML example.

service:
  name: helloworld
  identifier: Google_Function
  serviceDefinition:
    type: GoogleCloudFunctions
    spec:
      manifests:
        - manifest:
            identifier: GoogleFunction
            type: GoogleCloudFunctionDefinition
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /GoogleFunctionDefinition.yaml
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: gcp_connector
                project: cd-play
                bucket: cloud-functions-automation-bucket
                artifactPath: helloworld
              identifier: helloworld
              type: GoogleCloudStorage

Create a service using the Create Services API.

curl -i -X POST \
  'https://app.harness.io/gateway/ng/api/servicesV2/batch?accountIdentifier=<Harness account Id>' \
  -H 'Content-Type: application/json' \
  -H 'x-api-key: <Harness API key>' \
  -d '[{
    "identifier": "Google_Function",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "helloworld",
    "description": "string",
    "tags": {
      "property1": "string",
      "property2": "string"
    },
    "yaml": "service:\n  name: helloworld\n  identifier: Google_Function\n  serviceDefinition:\n    type: GoogleCloudFunctions\n    spec:\n      manifests:\n        - manifest:\n            identifier: GoogleFunction\n            type: GoogleCloudFunctionDefinition\n            spec:\n              store:\n                type: Harness\n                spec:\n                  files:\n                    - /GoogleFunctionDefinition.yaml\n      artifacts:\n        primary:\n          primaryArtifactRef: <+input>\n          sources:\n            - spec:\n                connectorRef: gcp_connector\n                project: cd-play\n                bucket: cloud-functions-automation-bucket\n                artifactPath: helloworld\n              identifier: helloworld\n              type: GoogleCloudStorage"
  }]'

For the Terraform Provider resource, go to harness_platform_service.

resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: helloworld
      identifier: Google_Function
      serviceDefinition:
        type: GoogleCloudFunctions
        spec:
          manifests:
            - manifest:
                identifier: GoogleFunction
                type: GoogleCloudFunctionDefinition
                spec:
                  store:
                    type: Harness
                    spec:
                      files:
                        - /GoogleFunctionDefinition.yaml
          artifacts:
            primary:
              primaryArtifactRef: <+input>
              sources:
                - spec:
                    connectorRef: gcp_connector
                    project: cd-play
                    bucket: cloud-functions-automation-bucket
                    artifactPath: helloworld
                  identifier: helloworld
                  type: GoogleCloudStorage
  EOT
}

To configure a Harness Cloud Function service in the Harness Manager, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Google Cloud Functions.

- In Function Definition, enter the manifest YAML. You have two options.

- You can add the manifest YAML inline.

- Select Add Function Definition and connect Harness with the Git repo where the manifest YAML is located. You can also use the Harness File Store.

- In Artifacts, add the Google Cloud Storage location of the ZIP file that corresponds to the manifest YAML.

- Select Save.

Using service variables in manifest YAML

Service variables are a powerful way to template your services or make them more dynamic.

In the Variables section of the service, you can add service variables and then reference them in any of the manifest YAML files you added to the service.

For example, you could create a variable named entryPoint for the manifest entryPoint setting and set its value as a fixed value, runtime input, or expression.

Next, in your manifest YAML file, you could reference the variable like this (see <+serviceVariables.entryPoint>):
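A minimal sketch of such a manifest, assuming a 2nd gen function and a service variable named entryPoint as described above. The function name is a placeholder, and the other values are taken from the sample definition earlier in this topic.

```yaml
# Function Definition that reads entryPoint from a Harness service variable.
function:
  name: my-function                 # placeholder function name
  buildConfig:
    runtime: nodejs18
    # Resolved by Harness at runtime from the service variable "entryPoint".
    entryPoint: <+serviceVariables.entryPoint>
  environment: GEN_2
function_id: my-function
```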

Now, when you add that service to a pipeline, you will be prompted to enter a value for this variable in the pipeline Services tab. The value you provide is then used as the entryPoint in your manifest YAML.

Cloud Functions environments

The Cloud Function environment contains an Infrastructure Definition that identifies the GCP account, project, and region to use.

Here's an example of a Cloud Functions environment.


environment:
  name: GCF
  identifier: GCF
  description: "GCF environment"
  tags: {}
  type: PreProduction
  orgIdentifier: default
  projectIdentifier: serverlesstest
  variables: []

Create an environment using the Create Environments API.

curl -i -X POST \
  'https://app.harness.io/gateway/ng/api/environmentsV2?accountIdentifier=<account_id>' \
  -H 'Content-Type: application/json' \
  -H 'x-api-key: <token>' \
  -d '{
    "orgIdentifier": "default",
    "projectIdentifier": "serverlesstest",
    "identifier": "GCF",
    "tags": {
      "property1": "",
      "property2": ""
    },
    "name": "GCF",
    "description": "",
    "color": "",
    "type": "PreProduction",
    "yaml": "environment:\n  name: GCF\n  identifier: GCF\n  description: \"dev google cloud environment\"\n  tags: {}\n  type: PreProduction\n  orgIdentifier: default\n  projectIdentifier: serverlesstest\n  variables: []"
  }'

For the Terraform Provider resource, go to harness_platform_environment and harness_platform_environment_service_overrides.

Here's an example of harness_platform_environment:

resource "harness_platform_environment" "example" {
  identifier = "GCF"
  name       = "GCF"
  org_id     = "default"
  project_id = "myproject"
  tags       = ["foo:bar", "baz"]
  type       = "PreProduction"

  ## ENVIRONMENT V2 Update
  ## The YAML is needed if you want to define the Environment Variables and Overrides for the environment
  ## Not Mandatory for Environment Creation nor Pipeline Usage
  yaml = <<-EOT
    environment:
      name: GCF
      identifier: GCF
      description: ""
      tags: {}
      type: PreProduction
      orgIdentifier: default
      projectIdentifier: myproject
      variables: []
  EOT
}

To create an environment, do the following:

- In your project, in CD (Deployments), select Environments.

- Select New Environment.

- Enter a name for the new environment.

- In Environment Type, select Production or Pre-Production. The Production or Pre-Production settings can be used in Harness RBAC to restrict who can deploy to these environments.

- Select Save. The new environment is created.

Pipelines require that an environment have an infrastructure definition. We'll cover that next.

Define the infrastructure

You define the target infrastructure for your deployment in the Environment settings of the pipeline stage. You can define an environment separately and select it in the stage, or create the environment within the stage Environment tab.

There are two methods of specifying the deployment target infrastructure:

- Pre-existing: the target infrastructure already exists and you simply need to provide the required settings.

- Dynamically provisioned: the target infrastructure will be dynamically provisioned on-the-fly as part of the deployment process.

For details on Harness provisioning, go to Provisioning overview.

GCP connector

You will need a Harness GCP Connector with correct permissions to deploy Cloud Functions in GCP.

You can pick the same GCP connector you used in the Harness service to connect to Google Cloud Storage for the artifact, or create a new connector.

Pre-existing Functions infrastructure


Here's a YAML example of a Cloud Function infrastructure definition.

infrastructureDefinition:
  name: dev
  identifier: dev
  description: "dev google cloud infrastructure"
  tags: {}
  orgIdentifier: default
  projectIdentifier: serverlesstest
  environmentRef: dev
  deploymentType: GoogleCloudFunctions
  type: GoogleCloudFunctions
  spec:
    connectorRef: gcp_connector
    project: cd-play
    region: us-central1
  allowSimultaneousDeployments: false

Create an infrastructure definition using the Create Infrastructure API.

curl -i -X POST \
  'https://app.harness.io/gateway/ng/api/infrastructures?accountIdentifier=<account_Id>' \
  -H 'Content-Type: application/json' \
  -H 'x-api-key: <token>' \
  -d '{
    "identifier": "dev",
    "orgIdentifier": "default",
    "projectIdentifier": "serverlesstest",
    "environmentRef": "dev",
    "name": "dev",
    "description": "",
    "tags": {
      "property1": "1",
      "property2": "2"
    },
    "type": "GoogleCloudFunctions",
    "yaml": "infrastructureDefinition:\n  name: dev\n  identifier: dev\n  description: \"dev google cloud infrastructure\"\n  tags: {}\n  orgIdentifier: default\n  projectIdentifier: serverlesstest\n  environmentRef: dev\n  deploymentType: GoogleCloudFunctions\n  type: GoogleCloudFunctions\n  spec:\n    connectorRef: gcp_connector\n    project: cd-play\n    region: us-central1\n  allowSimultaneousDeployments: false"
  }'

For the Terraform Provider resource, go to harness_platform_infrastructure.

Here's an example of harness_platform_infrastructure:

resource "harness_platform_infrastructure" "example" {
  identifier      = "dev"
  name            = "dev"
  org_id          = "default"
  project_id      = "serverlesstest"
  env_id          = "dev"
  type            = "GoogleCloudFunctions"
  deployment_type = "GoogleCloudFunctions"
  yaml = <<-EOT
    infrastructureDefinition:
      name: dev
      identifier: dev
      description: "dev google cloud infrastructure"
      tags: {}
      orgIdentifier: default
      projectIdentifier: serverlesstest
      environmentRef: dev
      deploymentType: GoogleCloudFunctions
      type: GoogleCloudFunctions
      spec:
        connectorRef: gcp_connector
        project: cd-play
        region: us-central1
      allowSimultaneousDeployments: false
  EOT
}

To create the Cloud Functions infrastructure definition in an environment, do the following:

- In your project, in CD (Deployments), select Environments.

- Select the environment where you want to add the infrastructure definition.

- In the environment, select Infrastructure Definitions.

- Select Infrastructure Definition.

- In Create New Infrastructure, in Name, enter a name for the new infrastructure definition.

- In Deployment Type, select Google Cloud Functions.

- In Google Cloud Provider Details, in Connector, select or create a Harness GCP connector that connects Harness with the account where you want the Cloud Function deployed. You can use the same GCP connector you used when adding the Cloud Function artifact in the Harness service. Ensure the credentials in the GCP connector meet the requirements in Cloud Functions permission requirements.

- In Project, select the GCP project where you want to deploy.

- In Region, select the GCP region where you want to deploy.

- Select Save.

The infrastructure definition is added.

Dynamically provisioned Functions infrastructure

Currently, the dynamic provisioning documented in this topic is behind the feature flag CD_NG_DYNAMIC_PROVISIONING_ENV_V2. Contact Harness Support to enable the feature.

Here is a summary of the steps to dynamically provision the target infrastructure for a deployment:

- Add dynamic provisioning to the CD stage:

  - In a Harness Deploy stage, in Environment, enable the option Provision your target infrastructure dynamically during the execution of your Pipeline.

  - Select the type of provisioner that you want to use. Harness automatically adds the provisioner steps for the provisioner type you selected.

  - Configure the provisioner steps to run your provisioning scripts.

- Select or create a Harness infrastructure in Environment.

- Map the provisioner outputs to the Infrastructure Definition:

  - In the Harness infrastructure, enable the option Map Dynamically Provisioned Infrastructure.

  - Map the provisioning script/template outputs to the required infrastructure settings.

Supported provisioners

The following provisioners are supported for Google Functions deployments:

- Terraform

- Terragrunt

- Terraform Cloud

- Shell Script

Adding dynamic provisioning to the stage

To add dynamic provisioning to a Harness pipeline Deploy stage, do the following:

- In a Harness Deploy stage, in Environment, enable the option Provision your target infrastructure dynamically during the execution of your Pipeline.

- Select the type of provisioner that you want to use. Harness automatically adds the necessary provisioner steps.

- Set up the provisioner steps to run your provisioning scripts.

For documentation on each of the required steps for the provisioner you selected, go to the following topics:

- Terraform:

- Terraform Plan

- Terraform Apply

- Terraform Rollback. To see the Terraform Rollback step, toggle the Rollback setting.

- Terragrunt

- Terraform Cloud

- Shell Script

Mapping provisioner output

Once you set up dynamic provisioning in the stage, you must map outputs from your provisioning script/template to specific settings in the Harness Infrastructure Definition used in the stage.

- In the same CD Deploy stage where you enabled dynamic provisioning, select or create (New Infrastructure) a Harness infrastructure.

- In the Harness infrastructure, in Select Infrastructure Type, select Google Cloud Platform if it is not already selected.

- In Google Cloud Provider Details, enable the option Map Dynamically Provisioned Infrastructure. A Provisioner setting is added and configured as a runtime input.

- Map the provisioning script/template outputs to the required infrastructure settings.

To provision the target deployment infrastructure, Harness needs specific infrastructure information from your provisioning script. You provide this information by mapping specific Infrastructure Definition settings in Harness to outputs from your template/script.

For Google Cloud Functions, Harness needs the following settings mapped to outputs:

- Project

- Region

Ensure the Project and Region settings are set to the Expression option.

For example, here's a snippet of a Terraform script that provisions the infrastructure for a Cloud Function deployment and includes the required outputs:

provider "google" {
  credentials = file("<PATH_TO_YOUR_GCP_SERVICE_ACCOUNT_KEY_JSON>")
  project     = "<YOUR_GCP_PROJECT_ID>"
  region      = "us-central1" # Replace with your desired region
}

resource "google_project_service" "cloudfunctions" {
  project = "<YOUR_GCP_PROJECT_ID>"
  service = "cloudfunctions.googleapis.com"
}

resource "google_cloudfunctions_function" "my_function" {
  name                = "my-cloud-function"
  description         = "My Cloud Function"
  runtime             = "nodejs14"
  trigger_http        = true
  available_memory_mb = 256
  timeout             = 60 # Timeout in seconds

  # Source settings (such as source_archive_bucket and source_archive_object) are omitted from this snippet.

  # Optionally, you can define environment variables for your function
  environment_variables = {
    VAR1 = "value1"
    VAR2 = "value2"
  }
}

output "project_name" {
  value = google_cloudfunctions_function.my_function.project
}

output "region_name" {
  value = google_cloudfunctions_function.my_function.region
}

In the Harness Infrastructure Definition, you map outputs to their corresponding settings using expressions in the format <+provisioner.OUTPUT_NAME>, such as <+provisioner.project_name>.
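As a sketch of that mapping, the infrastructure definition from the pre-existing example could reference the two Terraform outputs as shown below. The output names project_name and region_name come from the script above. Any provisioner-related fields that Harness adds when Map Dynamically Provisioned Infrastructure is enabled are omitted here, so compare against the YAML Harness generates.

```yaml
# Infrastructure definition with Project and Region set as expressions.
infrastructureDefinition:
  name: dev
  identifier: dev
  orgIdentifier: default
  projectIdentifier: serverlesstest
  environmentRef: dev
  deploymentType: GoogleCloudFunctions
  type: GoogleCloudFunctions
  spec:
    connectorRef: gcp_connector
    # Each expression resolves to the Terraform output of the same name.
    project: <+provisioner.project_name>
    region: <+provisioner.region_name>
  allowSimultaneousDeployments: false
```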

Cloud Functions execution strategies

Harness Cloud Functions deployments support the following deployment strategies:

- Basic

- Canary

- Blue Green

Basic

The basic deployment execution strategy uses the Deploy Cloud Function step. The Deploy Cloud Function step deploys the new function version and routes 100% of traffic over to the new function version.

YAML example of Deploy Cloud Function step

execution:
  steps:
    - step:
        name: Deploy Cloud Function
        identifier: deployCloudFunction
        type: DeployCloudFunction
        timeout: 10m
        spec: {}

Canary

Harness provides a step group to perform the canary deployment.

The step group consists of:

- Deploy Cloud Function With No Traffic step: deploys the new function version but does not route any traffic over to the new function version.

- First Cloud Function Traffic Shift step: routes 10% of traffic over to the new function version.

- Second Cloud Function Traffic Shift step: routes 100% of traffic over to the new function version.

YAML example of canary deployment step group

execution:
  steps:
    - stepGroup:
        name: Canary Deployment
        identifier: canaryDeployment
        steps:
          - step:
              name: Deploy Cloud Function With No Traffic
              identifier: deployCloudFunctionWithNoTraffic
              type: DeployCloudFunctionWithNoTraffic
              timeout: 10m
              spec: {}
          - step:
              name: Cloud Function Traffic Shift
              identifier: cloudFunctionTrafficShiftFirst
              type: CloudFunctionTrafficShift
              timeout: 10m
              spec:
                trafficPercent: 10
          - step:
              name: Cloud Function Traffic Shift
              identifier: cloudFunctionTrafficShiftSecond
              type: CloudFunctionTrafficShift
              timeout: 10m
              spec:
                trafficPercent: 100

Blue Green

Harness provides a step group to perform the blue green deployment.

The step group consists of:

- Deploy Cloud Function With No Traffic step: deploys the new function version but does not route any traffic over to the new function version.

- Cloud Function Traffic Shift step: routes 100% of traffic over to the new function version.

You can also route traffic incrementally using multiple Cloud Function Traffic Shift steps with gradually increasing routing percentages.

YAML example of blue green deployment step group

execution:
  steps:
    - stepGroup:
        name: Blue Green Deployment
        identifier: blueGreenDeployment
        steps:
          - step:
              name: Deploy Cloud Function With No Traffic
              identifier: deployCloudFunctionWithNoTraffic
              type: DeployCloudFunctionWithNoTraffic
              timeout: 10m
              spec: {}
          - step:
              name: Cloud Function Traffic Shift
              identifier: cloudFunctionTrafficShift
              type: CloudFunctionTrafficShift
              timeout: 10m
              spec:
                trafficPercent: 100
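The incremental routing mentioned above can be sketched with several Cloud Function Traffic Shift steps in sequence. The percentages (25, 50, 100) and the step identifiers are illustrative choices, not recommended values. The step types and the trafficPercent setting are the same ones used in the examples in this topic.

```yaml
# Traffic shifted to the new function version in three increments.
- step:
    name: Cloud Function Traffic Shift
    identifier: trafficShift25        # hypothetical identifier
    type: CloudFunctionTrafficShift
    timeout: 10m
    spec:
      trafficPercent: 25
- step:
    name: Cloud Function Traffic Shift
    identifier: trafficShift50        # hypothetical identifier
    type: CloudFunctionTrafficShift
    timeout: 10m
    spec:
      trafficPercent: 50
- step:
    name: Cloud Function Traffic Shift
    identifier: trafficShift100       # hypothetical identifier
    type: CloudFunctionTrafficShift
    timeout: 10m
    spec:
      trafficPercent: 100
```

These steps would follow the Deploy Cloud Function With No Traffic step inside the step group. Placing an approval or verification step between shifts is one way to gate each increment.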

Rollbacks

If deployment failure occurs, the stage or step failure strategy is initiated. Typically, this runs the Rollback Cloud Function step in the Rollback section of Execution. Harness adds the Rollback Cloud Function step automatically.

The Harness rollback capabilities are based on the Google Cloud Function revisions available in Google Cloud.

For example, suppose the function already exists at revision 10 and a deployment creates revision 11. If a step after the deployment fails and rollback is triggered, Harness deploys a new function revision (revision 12) that contains the artifact and metadata of revision 10.
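For orientation, the rollback step sits in the rollbackSteps list next to steps in the stage's execution section. The sketch below is an assumption based on the naming pattern of the other steps in this topic. In particular, the type value is not confirmed here, so copy the exact value from the step Harness adds to your stage.

```yaml
execution:
  steps:
    - step:
        name: Deploy Cloud Function
        identifier: deployCloudFunction
        type: DeployCloudFunction
        timeout: 10m
        spec: {}
  rollbackSteps:
    - step:
        name: Rollback Cloud Function
        identifier: cloudFunctionRollback
        # Assumed type name; verify against the step Harness generates.
        type: CloudFunctionRollback
        timeout: 10m
        spec: {}
```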